Data Ingestion & Knowledge Sources

- 100+ Prebuilt Connectors – Google Drive, Slack, Salesforce, GitHub, Pinecone, Qdrant, MongoDB Atlas
- Multimodal Embed v4.0 – Text + images in single vectors, 96 images/batch processing
- Binary Embeddings – 8x storage reduction vs int8 (1024 dims at 1 bit each → 128 bytes)
- ⚠️ NO Native Cloud UI – Connectors require developer setup, not drag-and-drop

- Document support – PDF, DOCX, HTML automatically indexed (Vectara Platform)
- Auto-sync connectors – Cloud storage and enterprise system integrations keep data current
- Embedding processing – Background conversion to embeddings enables fast semantic search

- 1,400+ file formats – PDF, DOCX, Excel, PowerPoint, Markdown, HTML + auto-extraction from ZIP/RAR/7Z archives
- Website crawling – Sitemap indexing with configurable depth for help docs, FAQs, and public content
- Multimedia transcription – AI Vision, OCR, YouTube/Vimeo/podcast speech-to-text built-in
- Cloud integrations – Google Drive, SharePoint, OneDrive, Dropbox, Notion with auto-sync
- Knowledge platforms – Zendesk, Freshdesk, HubSpot, Confluence, Shopify connectors
- Massive scale – 60M words (Standard) / 300M words (Premium) per bot with no performance degradation
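The binary-embedding storage figure in the first column comes down to simple arithmetic: 1,024 dimensions at one bit each is 1,024 bits, or 128 bytes, which is 8x smaller than one byte per dimension (int8) and 32x smaller than four bytes per dimension (float32). The sketch below is a minimal, generic illustration of sign-based binary quantization compared by Hamming distance; it is not Cohere's API, and `binarize` and `hamming` are hypothetical helper names.

```python
import random


def binarize(vec: list[float]) -> bytes:
    """Binary quantization: keep one sign bit per dimension and pack 8 bits per byte."""
    if len(vec) % 8 != 0:
        raise ValueError("dimension must be a multiple of 8")
    out = bytearray()
    for i in range(0, len(vec), 8):
        byte = 0
        for bit, x in enumerate(vec[i:i + 8]):
            if x > 0:  # positive component -> 1, otherwise 0
                byte |= 1 << (7 - bit)  # most significant bit first
        out.append(byte)
    return bytes(out)


def hamming(a: bytes, b: bytes) -> int:
    """Count differing bits; a smaller distance means more similar vectors."""
    return sum(bin(x ^ y).count("1") for x, y in zip(a, b))


if __name__ == "__main__":
    rng = random.Random(0)
    dims = 1024
    v = [rng.uniform(-1, 1) for _ in range(dims)]
    packed = binarize(v)
    print(len(packed))              # 128 bytes
    print(dims * 1 // len(packed))  # 8x smaller than int8 (1 byte per dim)
    print(dims * 4 // len(packed))  # 32x smaller than float32 (4 bytes per dim)
```

The "minimal loss" claimed for binary embeddings depends on the model being trained or calibrated for it; production systems commonly use the binary vectors for a fast first pass and rescore the top candidates with higher-precision vectors.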

- Developer Frameworks – LangChain, LlamaIndex, Haystack, Zapier (8,000+ apps)
- Multi-Cloud Deployment – AWS Bedrock, Azure, GCP, Oracle OCI, cloud-agnostic portability
- Cohere Toolkit – Open-source (3,150+ GitHub stars) Next.js deployment app
- ⚠️ NO Native Messaging/Widget – NO Slack, WhatsApp, or Teams integration; an embeddable chat requires custom development

- REST APIs & SDKs – Easy integration into custom applications with comprehensive tooling
- Embedded experiences – Search/chat widgets for websites, mobile apps, custom portals
- Low-code connectors – Azure Logic Apps and PowerApps simplify workflow integration

- Website embedding – Lightweight JS widget or iframe with customizable positioning
- CMS plugins – WordPress, Wix, Webflow, Framer, Squarespace native support
- 5,000+ app ecosystem – Zapier connects CRMs, marketing, e-commerce tools
- MCP Server – Integrate with Claude Desktop, Cursor, ChatGPT, Windsurf
- OpenAI SDK compatible – Drop-in replacement for OpenAI API endpoints
- LiveChat + Slack – Native chat widgets with human handoff capabilities

- North Platform (GA Aug 2025) – Customizable agents for HR, finance, IT with MCP
- Grounded Generation – Inline citations showing exact document spans to reduce hallucination
- Multi-Step Tool Use – Command models execute parallel tool calls with reasoning
- ⚠️ NO Lead Capture/Analytics – Must implement at application layer, no marketing automation

- Agentic RAG Framework – Python library for autonomous agents: emails, bookings, system integration
- Agent APIs (Tech Preview) – Customizable reasoning models, behavioral instructions, tool access controls
- LlamaIndex integration – Rapid tool creation connecting Vectara corpora, single-line code generation
- Multi-LLM support – OpenAI, Anthropic, Gemini, Groq, Together.AI, Cohere, AWS Bedrock integration
- Step-level audit trails – Source citations, reasoning steps, decision paths for governance compliance
- ✅ Grounded actions – Document-grounded decisions with citations, 0.9% hallucination rate (Mockingbird-2-Echo)
- ⚠️ Developer platform – Requires programming expertise, not for non-technical teams
- ⚠️ No chatbot UI – No polished widgets or turnkey conversational interfaces
- ⚠️ Tech preview status – Agent APIs subject to change before general availability

- Custom AI Agents – Autonomous GPT-4/Claude agents for business tasks
- Multi-Agent Systems – Specialized agents for support, sales, knowledge
- Memory & Context – Persistent conversation history across sessions
- Tool Integration – Webhooks + 5,000 Zapier apps for automation
- Continuous Learning – Auto re-indexing without manual retraining

- Open-Source Toolkit (MIT) – Complete frontend source code for unlimited customization
- Fine-Tuning via LoRA – Command R models with 16K training context for specialization
- White-Labeling – Fully supported via self-hosted deployments, NO Cohere branding
- ⚠️ NO Visual Agent Builder – Agent creation requires Python SDK, not for non-technical users

- White-label control – Full theming, logos, CSS customization for brand alignment
- Domain restrictions – Bot scope and branding configurable per deployment
- Search UI styling – Result cards and search interface match company identity

- Full white-labeling included – Colors, logos, CSS, custom domains at no extra cost
- 2-minute setup – No-code wizard with drag-and-drop interface
- Persona customization – Control AI personality, tone, response style via pre-prompts
- Visual theme editor – Real-time preview of branding changes
- Domain allowlisting – Restrict embedding to approved sites only
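Domain allowlisting, listed above, typically means comparing the origin of the page that loads the widget against a list of approved domains. The following is a minimal, hypothetical sketch of such a check (the function name and matching rules are assumptions, not any vendor's implementation). Note that the browser-supplied Origin header can be forged by non-browser clients, so a check like this limits where a widget is embedded rather than authenticating callers.

```python
from urllib.parse import urlsplit


def origin_allowed(origin: str, allowlist: set[str]) -> bool:
    """Hypothetical check: is the page origin an allowlisted domain or one of its subdomains?"""
    host = urlsplit(origin).hostname  # lowercased host, or None if unparsable
    if not host:
        return False
    for domain in allowlist:
        d = domain.lower().lstrip(".")
        # Exact match, or a true subdomain (the leading dot blocks "evilexample.com").
        if host == d or host.endswith("." + d):
            return True
    return False


allowed = {"example.com"}
print(origin_allowed("https://help.example.com", allowed))      # True
print(origin_allowed("https://example.com.evil.net", allowed))  # False
```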

- Command A – 256K context, $2.50/$10 per 1M input/output tokens, 75% faster than GPT-4o, 2-GPU minimum
- Command R+ – 128K context, $2.50/$10, 50% higher throughput, 20% lower latency
- Command R – 128K context, $0.15/$0.60, ~16.7x cheaper output than Command A
- Command R7B – 128K context, $0.0375/$0.15, fastest and lowest cost
- 23 Optimized Languages – Including English, French, Spanish, German, Japanese, Korean, Chinese, Arabic

- Mockingbird default – In-house model, with GPT-4/GPT-3.5 via Azure OpenAI available
- Flexible selection – Choose model balancing cost versus quality for use case
- Custom prompts – Prompt templates configurable for tone, format, citation rules

- GPT-5.1 models – Latest thinking models (Optimal & Smart variants)
- GPT-4 series – GPT-4, GPT-4 Turbo, GPT-4o available
- Claude 4.5 – Anthropic's Opus available for Enterprise
- Auto model routing – Balances cost/performance automatically
- Zero API key management – All models managed behind the scenes
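The per-token prices in the Cohere column are quoted per 1M input/output tokens, so a workload's cost is a weighted sum of its token counts. This sketch estimates cost for a hypothetical monthly workload using the prices listed above; the dictionary keys are informal labels rather than official API model IDs, and current rates should be checked before relying on the numbers. It also reproduces the output-price ratios (about 66.7x for Command R7B and 16.7x for Command R, relative to Command A).

```python
# Per-1M-token list prices in USD (input, output), as listed in the Cohere column above.
# The keys are informal labels, not official API model IDs.
PRICES = {
    "command-a": (2.50, 10.00),
    "command-r-plus": (2.50, 10.00),
    "command-r": (0.15, 0.60),
    "command-r7b": (0.0375, 0.15),
}


def estimate_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Estimated USD cost of a workload under pay-per-token pricing."""
    in_price, out_price = PRICES[model]
    return (input_tokens * in_price + output_tokens * out_price) / 1_000_000


workload = (50_000_000, 10_000_000)  # hypothetical: 50M input + 10M output tokens per month
for model in PRICES:
    print(model, round(estimate_cost(model, *workload), 2))

# Output-price ratios behind the "66x" comparison:
print(round(PRICES["command-a"][1] / PRICES["command-r7b"][1], 1))  # 66.7
print(round(PRICES["command-a"][1] / PRICES["command-r"][1], 1))    # 16.7
```

For this illustrative workload, Command A comes to $225 and Command R7B to about $3.38, which is why matching the model to the task matters more than any single headline price.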

Developer Experience (APIs & SDKs)

- Four Official SDKs – Python, TypeScript/JS, Java, Go with multi-cloud support
- REST API v2 – Chat, Embed, Rerank, Classify, Tokenize, Fine-tuning, streaming
- Native RAG – documents parameter for grounded generation with inline citations
- LLM University (LLMU) – Learning paths for fundamentals, embeddings, SageMaker deployment

- Multi-language SDKs – C#, Python, Java, JavaScript with REST API (FAQs)
- Clear documentation – Sample code and guides for integration, indexing operations
- Secure authentication – Azure AD or custom auth setup for API access

- REST API – Full-featured for agents, projects, data ingestion, chat queries
- Python SDK – Open-source customgpt-client with full API coverage
- Postman collections – Pre-built requests for rapid prototyping
- Webhooks – Real-time event notifications for conversations and leads
- OpenAI compatible – Use existing OpenAI SDK code with minimal changes

- Command A Performance – 75% faster than GPT-4o, runs on 2 GPUs
- Embed v3.0 Benchmarks – MTEB 64.5, BEIR 55.9 among 90+ models
- Rerank 3.5 Context – 128K token window handles documents, emails, tables, code
- Grounded Generation – Inline citations show exact document spans, reduces hallucination

- ✅ Enterprise scale – Millisecond responses under heavy traffic (benchmarks)
- ✅ Hybrid search – Semantic and keyword matching for pinpoint accuracy
- ✅ Hallucination prevention – Advanced reranking with factual-consistency scoring

- Sub-second responses – Optimized RAG with vector search and multi-layer caching
- Benchmark-proven – 13% higher accuracy, 34% faster than OpenAI Assistants API
- Anti-hallucination tech – Responses grounded only in your provided content
- OpenGraph citations – Rich visual cards with titles, descriptions, images
- 99.9% uptime – Auto-scaling infrastructure handles traffic spikes

- Trial/Free – 20 chat calls/min, 1,000 calls/month for evaluation
- Production Pay-Per-Token – Command A $2.50/$10, R7B $0.0375/$0.15 per 1M input/output tokens (R7B output 66x cheaper than Command A)
- Production Unlimited Monthly – No monthly caps, 500 chat calls/min rate limit

- Usage-based pricing – Free tier available, bundles scale with growth (pricing)
- Enterprise tiers – Plans scale with query volume, data size for heavy usage
- Dedicated deployment – VPC or on-prem options for data isolation requirements

- Standard: $99/mo – 60M words, 10 bots
- Premium: $449/mo – 300M words, 100 bots
- Auto-scaling – Managed cloud scales with demand
- Flat rates – No per-query charges
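Per-minute rate limits such as the 20 chat calls/min trial tier in the Cohere column are easiest to respect with a client-side throttle, so requests wait locally instead of being rejected by the server. This is a minimal token-bucket sketch under that assumption; `TokenBucket` is a hypothetical helper, not part of any SDK, and the server still enforces its own limits (typically by rejecting excess requests).

```python
import time
from typing import Callable


class TokenBucket:
    """Client-side throttle: at most `rate` calls per `per` seconds on average, bursts up to `rate`."""

    def __init__(self, rate: int, per: float = 60.0, clock: Callable[[], float] = time.monotonic):
        self.capacity = float(rate)
        self.tokens = float(rate)      # start full so an initial burst is allowed
        self.refill_per_sec = rate / per
        self.clock = clock             # injectable so tests can control time
        self.last = clock()

    def try_acquire(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, capped at capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.refill_per_sec)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False  # caller should wait or queue instead of sending the request


bucket = TokenBucket(rate=20)  # e.g. 20 chat calls per minute
print(bucket.try_acquire())    # True
```

A bucket that starts full allows a short burst up to the limit and then settles to one call every three seconds at 20 calls/min.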

- SOC 2 Type II + ISO 27001 + ISO 42001 – Annual audits, AI Management certification
- GDPR + CCPA Compliant – Data Processing Addendums, EU data residency
- Zero Data Retention (ZDR) – Available upon approval, 30-day auto deletion
- Air-Gapped Deployment – Full private on-premise, ZERO Cohere infrastructure access
- ⚠️ NO HIPAA Certification – Healthcare PHI processing requires sales verification

- ✅ Data encryption – Encryption in transit and at rest, no model training on your content
- ✅ Compliance certifications – SOC 2, ISO, GDPR, HIPAA (details)
- ✅ Customer-managed keys – BYOK support with private deployments for full control

- SOC 2 Type II + GDPR – Third-party audited compliance
- Encryption – 256-bit AES at rest, SSL/TLS in transit
- Access controls – RBAC, 2FA, SSO, domain allowlisting
- Data isolation – Never trains on your data

Observability & Monitoring

- Native Dashboard – Billing/usage tracking, API key management, spending limits, token counts
- North Platform – Audit-ready logs, traceability for enterprise compliance
- Third-Party Integrations – Dynatrace, PostHog, New Relic, Grafana monitoring
- ⚠️ NO Native Real-Time Alerts – Proactive monitoring requires external integrations

- Azure portal dashboard – Query latency, index health, usage metrics at a glance
- Azure Monitor integration – Azure Monitor and Application Insights for custom alerts
- API log exports – Metrics exportable via API for compliance and analysis reports

- Real-time dashboard – Query volumes, token usage, response times
- Customer Intelligence – User behavior patterns, popular queries, knowledge gaps
- Conversation analytics – Full transcripts, resolution rates, common questions
- Export capabilities – API export to BI tools and data warehouses

- Discord Community – 21,691+ members for API discussions, troubleshooting, Maker Spotlight
- Cohere Labs – 4,500+-member research community, 100+ publications including Aya (101 languages)
- Interactive Documentation – docs.cohere.com with 'Try it' testing, Playground export
- Enterprise Support – Dedicated account management, custom deployment, bespoke pricing
- ⚠️ NO Live Chat/Phone – Standard customers use Discord and email only

- Microsoft network – Comprehensive docs, forums, technical guides backed by Microsoft
- Enterprise support – Dedicated channels and SLA-backed help for enterprise plans
- Azure ecosystem – Broad partner network and active developer community access

- Comprehensive docs – Tutorials, cookbooks, API references
- Email + in-app support – Under 24hr response time
- Premium support – Dedicated account managers for Premium/Enterprise
- Open-source SDK – Python SDK, Postman, GitHub examples
- 5,000+ Zapier apps – CRMs, e-commerce, marketing integrations

No-Code Interface & Usability

- Playground – Visual model testing with parameter tuning, SDK code export
- Dataset Upload UI – No-code dataset upload for fine-tuning via dashboard
- ⚠️ NO Visual Agent Builder – Agent creation requires Python SDK, not for non-technical users

- Azure portal UI – Straightforward index management and settings configuration interface
- Low-code options – PowerApps, Logic Apps connectors enable quick non-dev integration
- ⚠️ Technical complexity – Advanced indexing tweaks require developer expertise vs turnkey tools

- 2-minute deployment – Fastest time-to-value in the industry
- Wizard interface – Step-by-step with visual previews
- Drag-and-drop – Upload files, paste URLs, connect cloud storage
- In-browser testing – Test before deploying to production
- Zero learning curve – Productive on day one

Enterprise Deployment Flexibility (Core Differentiator)

- SaaS (Instant) – Immediate setup via Cohere API with global infrastructure
- Multi-Cloud Support – AWS Bedrock, Azure, GCP, Oracle OCI, cloud-agnostic portability
- VPC Deployment – <1 day setup within customer private cloud for isolation
- Air-Gapped/On-Premises – Full private deployment, ZERO Cohere data access
- ✅ Unmatched Among Providers – OpenAI, Anthropic, Google lack comparable on-premise options

N/A

N/A

Grounded Generation with Citations (Core Differentiator)

- Inline Citations – Responses show exact document spans informing each answer
- Fine-Grained Attribution – Citations link specific sentences/paragraphs rather than generic references
- Rerank 3.5 Integration – 128K context filters emails, tables, JSON down to relevant passages
- Native RAG API – documents parameter enables grounded generation without external orchestration
- ✅ Competitive Advantage – Most platforms need custom citation logic; Cohere provides it built-in

N/A

N/A
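Span-level citations of the kind described in this section are usually delivered as character offsets into the answer text plus the ids of the supporting documents; the client then decides how to display them. This is a minimal rendering sketch under that assumption. The `Citation` class is a hypothetical stand-in, not the Cohere SDK's type, so map its fields from whatever the actual response contains.

```python
from dataclasses import dataclass


@dataclass
class Citation:
    start: int             # offset of the first cited character in the answer text
    end: int               # offset just past the last cited character
    source_ids: list[str]  # ids of the documents that support this span


def render_inline(text: str, citations: list[Citation]) -> str:
    """Insert [id, ...] markers after each cited span.

    Spans are processed right-to-left so that inserting a marker never shifts
    the offsets of spans that have not been handled yet.
    """
    out = text
    for c in sorted(citations, key=lambda c: c.end, reverse=True):
        marker = "[" + ", ".join(c.source_ids) + "]"
        out = out[:c.end] + marker + out[c.end:]
    return out


answer = "Refunds take 5 days. Shipping is free."
cites = [Citation(0, 20, ["doc1"]), Citation(21, 38, ["doc2"])]
print(render_inline(answer, cites))
# Refunds take 5 days.[doc1] Shipping is free.[doc2]
```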

Multimodal Embed v4.0 (Differentiator)

- Text + Images – Single vectors combining text/images eliminate extraction pipelines
- 96 Images Per Batch – Embed Jobs API handles large-scale multimodal processing
- Matryoshka Learning – Flexible dimensionality (256/512/1024/1536) for cost-performance optimization
- Binary Embeddings – 8x storage reduction vs int8 for large vector databases, minimal loss
- ✅ Top-Tier Benchmarks – MTEB 64.5, BEIR 55.9 among 90+ models

N/A

N/A
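Matryoshka-trained embeddings concentrate the most important information in the leading dimensions, so a prefix of the vector (for example the first 256 of 1,536 values) is itself a usable embedding once it is re-normalized. The sketch below shows that client-side truncation. It assumes the model was trained this way, as the Matryoshka entry above states; whether to truncate locally or request a smaller output size from the API depends on the service, and the helper names are illustrative.

```python
import math


def truncate_embedding(vec: list[float], dim: int) -> list[float]:
    """Keep the first `dim` components of a Matryoshka-style embedding and re-normalize to unit length."""
    if not 0 < dim <= len(vec):
        raise ValueError("dim must be between 1 and the embedding size")
    head = vec[:dim]
    norm = math.sqrt(sum(x * x for x in head))
    return [x / norm for x in head] if norm > 0 else head


def cosine(a: list[float], b: list[float]) -> float:
    """Dot product; equals cosine similarity when both inputs are unit-normalized."""
    return sum(x * y for x, y in zip(a, b))


full = [3.0, 4.0, 12.0, 0.0]  # toy 4-dim vector standing in for a 1536-dim embedding
short = truncate_embedding(full, 2)
print(short)                  # [0.6, 0.8]
```

Re-normalizing matters because the truncated prefix no longer has unit length, and dot-product similarity would otherwise be biased by vector magnitude.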

- Command A – 23 optimized languages, including English, French, Spanish, German, Japanese, Korean, Chinese
- Embed and Rerank – 100+ languages with cross-lingual retrieval, no translation needed
- Aya Research Model – Cohere Labs open research covering 101 languages

N/A

N/A

RAG-as-a-Service Assessment

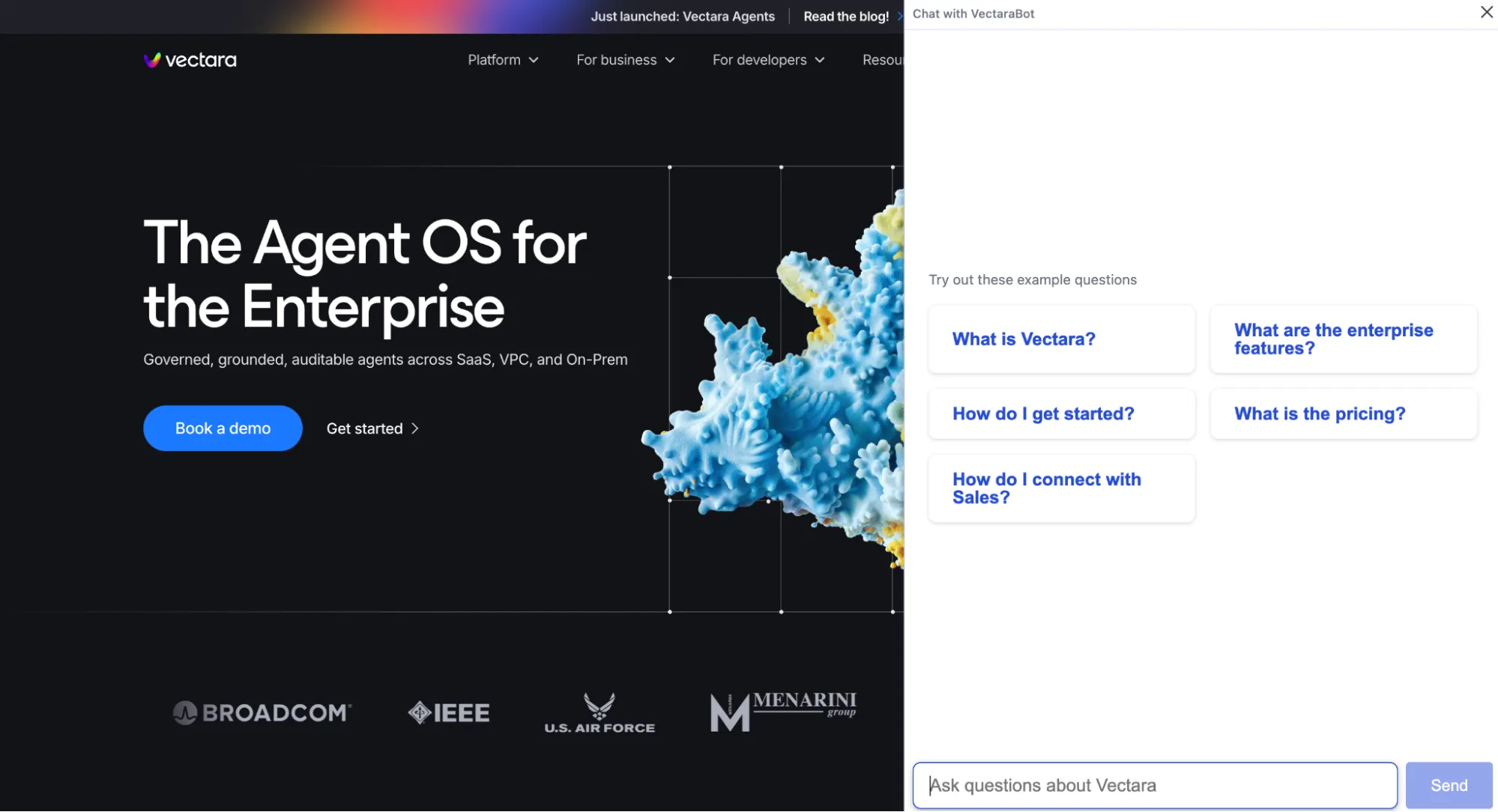

- Platform Type – TRUE RAG-AS-A-SERVICE API PLATFORM for custom developer solutions
- API-First Architecture – REST API v2 + 4 SDKs (Python, TypeScript, Java, Go)
- RAG Technology Leadership – Embed v4.0 (multimodal), Rerank 3.5 (128K), inline citations
- Deployment Flexibility – SaaS, VPC, air-gapped on-premise, unmatched among major providers
- ⚠️ CRITICAL GAPS – NO chat widgets, messaging integrations, visual builders, analytics

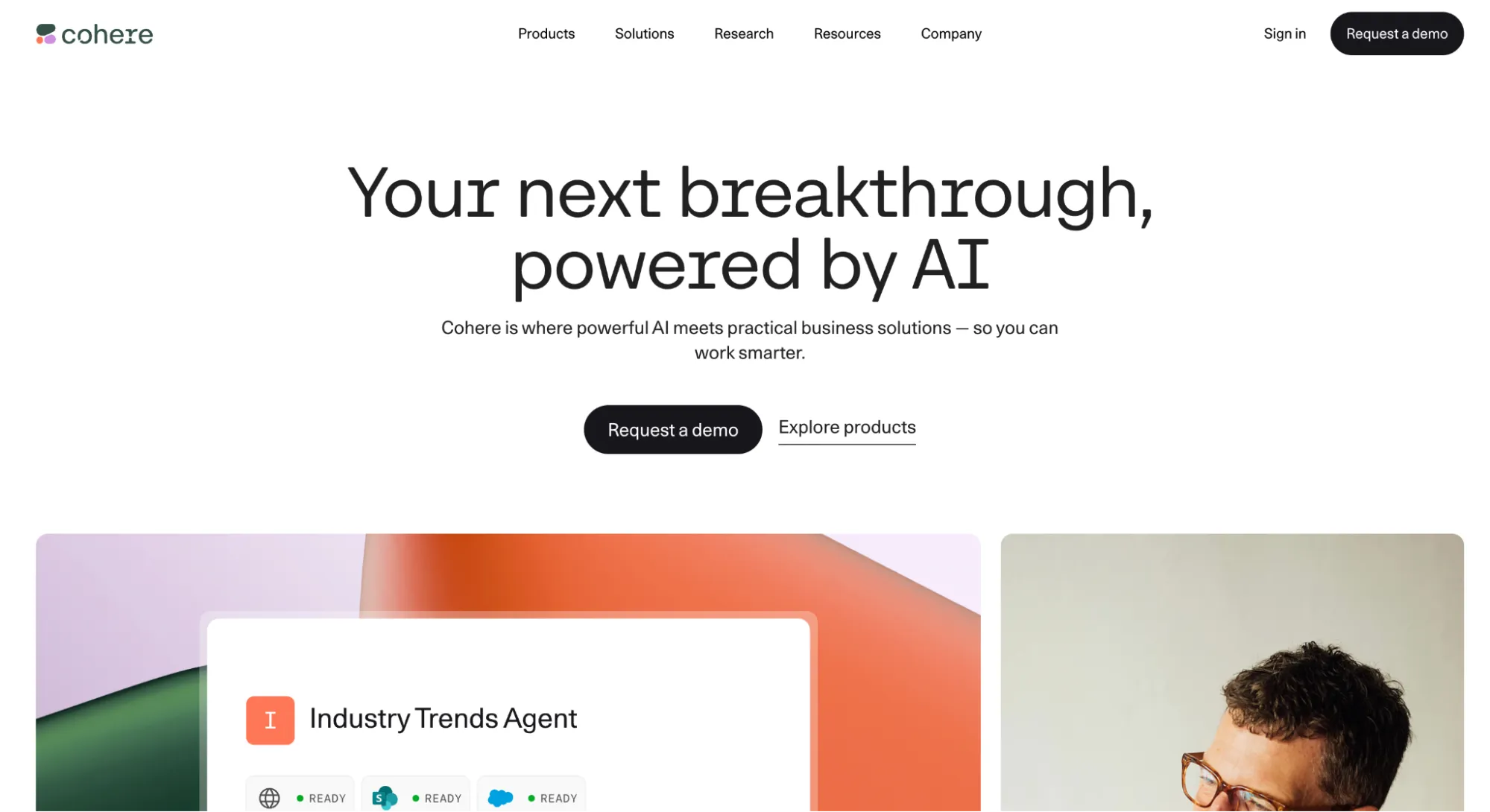

- Platform Type – TRUE ENTERPRISE RAG-AS-A-SERVICE: Agent OS for trusted AI
- Core Mission – Deploy AI assistants/agents with grounded answers, safe actions, always-on governance
- Target Market – Enterprises needing production RAG, white-label APIs, VPC/on-prem deployments
- RAG Implementation – Mockingbird LLM (26% better than GPT-4 on BERT F1), hybrid search, multi-stage reranking
- API-First Architecture – REST APIs, SDKs (C#/Python/Java/JS), Azure integration (Logic Apps/Power BI)
- Security & Compliance – SOC 2 Type 2, ISO 27001, GDPR, HIPAA, BYOK, VPC/on-prem
- Agent-Ready Platform – Python library, Agent APIs, structured outputs, audit trails, policy enforcement
- Advanced RAG Features – Hybrid search, reranking, HHEM scoring, multilingual retrieval (7 languages)
- Funding – $53.5M raised ($25M Series A July 2024, FPV/Race Capital)
- ⚠️ Enterprise complexity – Requires developer expertise for indexing, tuning, agent configuration
- ⚠️ No no-code builder – Azure portal management but no drag-and-drop chatbot builder
- ⚠️ Azure ecosystem focus – Best with Azure, less smooth for AWS/GCP cross-cloud flexibility
- Use Case Fit – Mission-critical RAG, regulated industries (SOC 2/HIPAA), white-label APIs, VPC/on-prem

- Platform type – TRUE RAG-AS-A-SERVICE with managed infrastructure
- API-first – REST API, Python SDK, OpenAI compatibility, MCP Server
- No-code option – 2-minute wizard deployment for non-developers
- Hybrid positioning – Serves both dev teams (APIs) and business users (no-code)
- Enterprise ready – SOC 2 Type II, GDPR, WCAG 2.0, flat-rate pricing

- Market Position – Enterprise-first RAG API platform with unmatched deployment flexibility
- Deployment Differentiator – Air-gapped on-premise, ZERO Cohere access vs SaaS-only competitors
- Security Leadership – SOC 2 + ISO 27001 + ISO 42001 (rare AI certification) + GDPR
- Cost Optimization – Command R7B output 66x cheaper than Command A, model-to-use-case matching
- Research Pedigree – Founded by Transformer co-author Aidan Gomez, $1.54B funding (RBC, Dell, Oracle)

- Market position – Enterprise RAG platform between Azure AI Search and chatbot builders
- Target customers – Enterprises needing production RAG, white-label APIs, VPC/on-prem deployments
- Key competitors – Azure AI Search, Coveo, OpenAI Enterprise, Pinecone Assistant
- Competitive advantages – Mockingbird LLM, hallucination detection, SOC 2/HIPAA compliance, millisecond responses
- Pricing advantage – Usage-based with free tier, best value for enterprise RAG infrastructure
- Use case fit – Mission-critical RAG, white-label APIs, Azure integration, high-accuracy requirements

- Market position – Leading RAG platform balancing enterprise accuracy with no-code usability. Trusted by 6,000+ orgs including Adobe, MIT, Dropbox.
- Key differentiators – #1 benchmarked accuracy • 1,400+ formats • Full white-labeling included • Flat-rate pricing
- vs OpenAI – 10% lower hallucination, 13% higher accuracy, 34% faster
- vs Botsonic/Chatbase – More file formats, source citations, no hidden costs
- vs LangChain – Production-ready in 2 min vs weeks of development

Customer Base & Case Studies

- Financial Services – RBC (Royal Bank of Canada) for banking knowledge and compliance
- Enterprise IT – Dell for knowledge management, Oracle for database docs
- Global Operations – LG Electronics using multilingual capabilities for global operations
- $1.54B Funding – Nvidia, Salesforce, Oracle, AMD, Schroders, Fujitsu investments

N/A

N/A

- Command A – 256K context, $2.50/$10, 75% faster than GPT-4o
- Command R+/R/R7B – 128K context, pricing from $0.0375 to $10 per 1M tokens
- 66x Cost Difference – Command R7B output 66x cheaper than Command A
- 23 Optimized Languages – Including English, French, Spanish, German, Japanese, Korean, Chinese, Arabic

- ✅ Mockingbird LLM – 26% better than GPT-4 on BERT F1, 0.9% hallucination rate
- ✅ Mockingbird 2 – 7 languages (EN/ES/FR/AR/ZH/JA/KO), under 10B parameters
- GPT-4/GPT-3.5 fallback – Azure OpenAI integration for OpenAI model preference
- HHEM + HCM – Hughes Hallucination Evaluation Model with Hallucination Correction Model (Mockingbird-2-Echo)
- ✅ No training on data – Customer data never used for model training/improvement
- Custom prompts – Templates configurable for tone, format, citation rules per domain

- OpenAI – GPT-5.1 (Optimal/Smart), GPT-4 series
- Anthropic – Claude 4.5 Opus/Sonnet (Enterprise)
- Auto-routing – Intelligent model selection for cost/performance
- Managed – No API keys or fine-tuning required

- Grounded Generation Built-In – Native documents parameter with fine-grained inline citations
- Embed v4.0 Multimodal – Text + images in single vectors, 96 images/batch
- Top-Tier Embeddings – MTEB 64.5, BEIR 55.9, Matryoshka (256/512/1024/1536 dim)
- Rerank 3.5 – 128K token context handles documents, emails, tables, JSON, code
- Binary Embeddings – 8x storage reduction vs int8 (1024 dims → 128 bytes), minimal loss

- ✅ Hybrid search – Semantic vector + BM25 keyword matching for pinpoint accuracy
- ✅ Advanced reranking – Multi-stage pipeline optimizes results before generation with relevance scoring
- ✅ Factual scoring – HHEM provides reliability score for every response's grounding quality
- ✅ Citation precision – Mockingbird outperforms GPT-4 on citation metrics, traceable to sources
- Multilingual RAG – Cross-lingual: query/retrieve/generate in different languages (7 supported)
- Structured outputs – Extract specific information for autonomous agent integration, deterministic data

- GPT-4 + RAG – Outperforms OpenAI in independent benchmarks
- Anti-hallucination – Responses grounded in your content only
- Automatic citations – Clickable source links in every response
- Sub-second latency – Optimized vector search and caching
- Scale to 300M words – No performance degradation at scale
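Hybrid search, as described in the Vectara column, combines a semantic (vector) score with a keyword score such as BM25. One common way to blend them is linear interpolation after normalizing both score sets to the same range, since raw BM25 and cosine scores live on different scales. This sketch assumes that approach; the weight name `lam` is hypothetical and this is not Vectara's exact formula (reciprocal rank fusion is a frequently used alternative).

```python
def hybrid_rank(semantic: dict[str, float], lexical: dict[str, float], lam: float) -> list[tuple[str, float]]:
    """Blend semantic and keyword (e.g. BM25) scores: (1 - lam) * semantic + lam * lexical.

    Each score set is min-max normalized to [0, 1] first. A document missing
    from one list scores 0 on that side.
    """

    def normalize(scores: dict[str, float]) -> dict[str, float]:
        if not scores:
            return {}
        lo, hi = min(scores.values()), max(scores.values())
        span = hi - lo
        return {k: (v - lo) / span if span else 1.0 for k, v in scores.items()}

    sem, lex = normalize(semantic), normalize(lexical)
    blended = {d: (1 - lam) * sem.get(d, 0.0) + lam * lex.get(d, 0.0) for d in sem.keys() | lex.keys()}
    # Highest score first; ties broken by document id for a deterministic order.
    return sorted(blended.items(), key=lambda kv: (-kv[1], kv[0]))


semantic = {"a": 0.9, "b": 0.5, "c": 0.1}  # cosine similarities
lexical = {"b": 12.0, "c": 3.0}             # BM25 scores; "a" has no keyword match
print([d for d, _ in hybrid_rank(semantic, lexical, lam=0.0)])  # ['a', 'b', 'c']
print([d for d, _ in hybrid_rank(semantic, lexical, lam=0.5)])  # ['b', 'a', 'c']
```

Raising `lam` favors exact keyword matches (useful for product codes or names), while lowering it favors paraphrased, meaning-level matches; a reranking stage would then reorder the blended top results.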

- Financial Services – RBC deployment for banking knowledge, compliance, North for Banking
- Healthcare – Ensemble Health for clinical knowledge (HIPAA verification required)
- Enterprise IT – Dell for knowledge management, customer support, documentation search
- Technology Companies – Oracle (database docs), LG Electronics (multilingual operations)

- Regulated industries – Health, legal, finance needing accuracy, security, SOC 2 compliance
- Enterprise knowledge – Q&A systems with precise answers from large document repositories
- Autonomous agents – Structured outputs for deterministic data extraction, decision-making workflows
- White-label APIs – Customer-facing search/chat with millisecond responses at enterprise scale
- Multilingual support – 7 languages with single knowledge base for multiple locales
- High accuracy needs – Citation precision, factual scoring, 0.9% hallucination rate (Mockingbird-2-Echo)

- Customer support – 24/7 AI handling common queries with citations
- Internal knowledge – HR policies, onboarding, technical docs
- Sales enablement – Product info, lead qualification, education
- Documentation – Help centers, FAQs with auto-crawling
- E-commerce – Product recommendations, order assistance

- Free Tier – Trial API key with 20 chat calls/min, 1,000 calls/month
- Production Pay-Per-Token – Command A $2.50/$10, R7B $0.0375/$0.15 per 1M input/output tokens (R7B output 66x cheaper than Command A)
- Production Unlimited Monthly – No monthly caps, 500 chat calls/min rate limit

- 30-day free trial – Full enterprise feature access for evaluation before commitment
- Usage-based pricing – Pay for query volume and data size with scalable tiers
- Free tier – Generous free tier for development, prototyping, small production deployments
- Enterprise pricing – Custom pricing for VPC/on-prem installations, heavy usage bundles available
- ✅ Transparent pricing – No per-seat charges, storage surprises, or model switching fees
- Funding – $53.5M raised ($25M Series A July 2024, FPV/Race Capital)

- Standard: $99/mo – 10 chatbots, 60M words, 5K items/bot
- Premium: $449/mo – 100 chatbots, 300M words, 20K items/bot
- Enterprise: Custom – SSO, dedicated support, custom SLAs
- 7-day free trial – Full Standard access, no charges
- Flat-rate pricing – No per-query charges, no hidden costs

Limitations & Considerations

- Developer-First Platform – Optimized for teams with coding skills, NOT business users
- NO Visual Agent Builder – Agent creation requires Python SDK, not for non-technical users
- NO Native Messaging/Widget – NO Slack, WhatsApp, or Teams integration; an embeddable chat needs custom development
- HIPAA Gap – No explicit certification, healthcare needs sales verification
- NOT Ideal For – SMBs without dev resources, teams needing visual builders/messaging

- ⚠️ Azure ecosystem focus – Best with Azure services, less smooth for AWS/GCP organizations
- ⚠️ Developer expertise needed – Advanced indexing requires technical skills vs turnkey no-code tools
- ⚠️ No drag-and-drop GUI – Azure portal management but no chatbot builder like Tidio/WonderChat
- ⚠️ Limited model selection – Mockingbird/GPT-4/GPT-3.5 only, no Claude/Gemini/custom models
- ⚠️ Sales-driven pricing – Contact sales for enterprise pricing, less transparent than self-serve platforms
- ⚠️ Overkill for simple bots – Enterprise RAG unnecessary for basic FAQ or customer service

- Managed service – Less control over RAG pipeline vs build-your-own
- Model selection – OpenAI + Anthropic only; no Cohere, AI21, open-source
- Real-time data – Requires re-indexing; not ideal for live inventory/prices
- Enterprise features – Custom SSO only on Enterprise plan

- Chat API – Multi-turn dialog with state/memory of previous turns for context
- Retrieval-Augmented Generation (RAG) – Document mode specifies which documents to reference
- Generative AI Extraction – Automatically extracts answers from responses for reuse
- Intent-Based AI – Goes beyond keyword search, surfacing relevant snippets for plain-English queries

- Vector + LLM search – Smart retrieval with generative answers, context-aware responses
- Mockingbird LLM – Proprietary model with source citations (details)
- Multi-turn conversations – Conversation history tracking for smooth back-and-forth dialogue

- ✅ #1 accuracy – Median 5/5 in independent benchmarks, 10% lower hallucination than OpenAI
- ✅ Source citations – Every response includes clickable links to original documents
- ✅ 93% resolution rate – Handles queries autonomously, reducing human workload
- ✅ 92 languages – Native multilingual support without per-language config
- ✅ Lead capture – Built-in email collection, custom forms, real-time notifications
- ✅ Human handoff – Escalation with full conversation context preserved
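Multi-turn chat, listed for all three platforms above, works by resending prior turns with each request, which means history must eventually be trimmed to fit the model's context budget. This is a minimal, hypothetical sketch of one common policy: keep the system message plus the newest turns that fit. The role/content message shape is a generic convention, not a specific vendor's schema, and the default word count is only a rough stand-in for a real tokenizer.

```python
from typing import Callable


def trim_history(
    messages: list[dict],
    budget: int,
    count: Callable[[str], int] = lambda text: len(text.split()),
) -> list[dict]:
    """Keep an optional leading system message plus the newest turns that fit in `budget`.

    `count` approximates token usage; the default counts whitespace-separated words.
    """
    system = messages[:1] if messages and messages[0]["role"] == "system" else []
    used = sum(count(m["content"]) for m in system)
    kept: list[dict] = []
    for m in reversed(messages[len(system):]):  # walk from the newest turn backwards
        cost = count(m["content"])
        if used + cost > budget:
            break
        kept.append(m)
        used += cost
    return system + kept[::-1]  # restore chronological order


history = [
    {"role": "system", "content": "be brief"},
    {"role": "user", "content": "one two three"},
    {"role": "assistant", "content": "four five"},
    {"role": "user", "content": "six"},
]
print([m["content"] for m in trim_history(history, budget=5)])
# ['be brief', 'four five', 'six']
```

Dropping the oldest turns first keeps recent context intact; platforms with persistent memory across sessions typically add summarization or retrieval over older turns rather than discarding them outright.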

Customization & Flexibility (Behavior & Knowledge)

N/A

- Indexing control – Configure chunk sizes, metadata tags, retrieval parameters
- Search weighting – Tune semantic vs lexical search balance per query
- Domain tuning – Adjust prompt templates and relevance thresholds for specialty domains

- Live content updates – Add/remove content with automatic re-indexing
- System prompts – Shape agent behavior and voice through instructions
- Multi-agent support – Different bots for different teams
- Smart defaults – No ML expertise required for custom behavior
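Chunk size, listed under indexing control in the Vectara column, determines how documents are split before embedding: larger chunks carry more context per hit, while smaller ones give more precise matches. Overlap between neighbouring chunks keeps sentences that straddle a boundary retrievable. The sketch below illustrates fixed-size windows with overlap, counted in words for simplicity (real indexers often count tokens or split on sentences); `size` and `overlap` are hypothetical parameter names, not Vectara configuration keys.

```python
def chunk_words(text: str, size: int, overlap: int) -> list[str]:
    """Split text into windows of `size` words; consecutive windows share `overlap` words."""
    if size <= 0 or not 0 <= overlap < size:
        raise ValueError("need size > 0 and 0 <= overlap < size")
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):  # this window already reached the end
            break
    return chunks


print(chunk_words("a b c d e f g", size=3, overlap=1))
# ['a b c', 'c d e', 'e f g']
```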

Additional Considerations

N/A

- ✅ Factual scoring – Hybrid search with reranking provides unique factual-consistency scores
- Flexible deployment – Public cloud, VPC, or on-prem for varied compliance needs
- Active development – Regular feature releases and integrations keep platform current

- Time-to-value – 2-minute deployment vs weeks with DIY
- Always current – Auto-updates to latest GPT models
- Proven scale – 6,000+ organizations, millions of queries
- Multi-LLM – OpenAI + Claude reduces vendor lock-in

N/A

- ✅ SOC 2 Type 2 – Independent audit demonstrating enterprise-grade operational security controls
- ✅ ISO 27001 + GDPR – Information security management with EU data protection compliance
- ✅ HIPAA ready – Healthcare compliance with BAAs available for PHI handling
- ✅ Encryption – TLS 1.3 in transit, AES-256 at rest with BYOK support
- ✅ Zero data retention – No model training on customer data, content stays private
- Private deployments – VPC or on-premise for data sovereignty and network isolation

- SOC 2 Type II + GDPR – Regular third-party audits, full EU compliance
- 256-bit AES encryption – Data at rest; SSL/TLS in transit
- SSO + 2FA + RBAC – Enterprise access controls with role-based permissions
- Data isolation – Never trains on customer data
- Domain allowlisting – Restrict chatbot to approved domains

N/A

- Enterprise support – Dedicated channels and SLA-backed help for enterprise customers
- Microsoft network – Extensive infrastructure, forums, technical guides backed by Microsoft
- Comprehensive docs – API references, integration guides, SDKs at docs.vectara.com
- Sample code – Pre-built examples, Jupyter notebooks, quick-start guides for rapid integration
- Active community – Developer forums for peer support, knowledge sharing, best practices

- Documentation hub – Docs, tutorials, API references
- Support channels – Email, in-app chat, dedicated managers (Premium+)
- Open-source – Python SDK, Postman, GitHub examples
- Community – User community + 5,000 Zapier integrations
