Data Ingestion & Knowledge Sources

File Support – PDF, Word, text, JSON, YAML, CSV; full website crawling

Cloud Integrations – Native Google Drive, Notion, Confluence, Guru (⚠️ no Dropbox)

Chat2KB (Growth/Enterprise) – Auto-extracts Q&A from conversations with conflict resolution

Real-time Updates – Starter 50 docs → Growth 1K → Enterprise unlimited

⚠️ YouTube transcripts NOT supported – LLMs "not great at video interpretation"

Handles 40+ formats, from PDFs and spreadsheets to audio, at massive scale.


Async ingest auto-scales, crunching millions of tokens per second—perfect for giant corpora


Ingest via code or API, so you can tap proprietary databases or custom pipelines with ease.

1,400+ file formats – PDF, DOCX, Excel, PowerPoint, Markdown, HTML + auto-extraction from ZIP/RAR/7Z archives

Website crawling – Sitemap indexing with configurable depth for help docs, FAQs, and public content

Multimedia transcription – AI Vision, OCR, YouTube/Vimeo/podcast speech-to-text built-in

Cloud integrations – Google Drive, SharePoint, OneDrive, Dropbox, Notion with auto-sync

Knowledge platforms – Zendesk, Freshdesk, HubSpot, Confluence, Shopify connectors

Massive scale – 60M words (Standard) / 300M words (Premium) per bot with no performance degradation

20+ Native Helpdesk Integrations – Zendesk, Intercom, Salesforce, Front, Gorgias, HubSpot (⚠️ no Zapier)

Omnichannel – Slack, Discord, Teams; WhatsApp/Messenger via Zendesk/Intercom (⚠️ not Telegram)

Website Options – Fini Widget, Search Bar, Standalone; Chrome Extension for agents

Ships a REST RAG API—plug it into websites, mobile apps, internal tools, or even legacy systems.

No off-the-shelf chat widget; you wire up your own front end


Website embedding – Lightweight JS widget or iframe with customizable positioning

CMS plugins – WordPress, WIX, Webflow, Framer, SquareSpace native support

5,000+ app ecosystem – Zapier connects CRMs, marketing, e-commerce tools

MCP Server – Integrate with Claude Desktop, Cursor, ChatGPT, Windsurf

OpenAI SDK compatible – Drop-in replacement for OpenAI API endpoints

LiveChat + Slack – Native chat widgets with human handoff capabilities

Sophie AI Agent – 5-layer execution: Safety, LLM Supervisor, Skills, Feedback, Traceability

100+ Languages – Locale-based routing with real-time translation

Human Handoff – Context-preserving escalation via keywords, sentiment, confidence thresholds

✅ 80% Ticket Resolution – End-to-end without human intervention claim
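The three escalation triggers named above (keywords, sentiment, confidence thresholds) can be pictured as a simple rule check. This is a minimal sketch and not Fini's implementation: the function name, the keyword list, the threshold values, and the idea that sentiment and confidence arrive as plain floats are all assumptions made for illustration.

```python
from dataclasses import dataclass

# Hypothetical trigger words and thresholds, chosen only for illustration.
ESCALATION_KEYWORDS = {"human", "agent", "representative"}
MIN_CONFIDENCE = 0.7
MIN_SENTIMENT = -0.5  # sentiment is assumed to lie in [-1.0, 1.0]


@dataclass
class Turn:
    message: str
    sentiment: float   # assumed to come from an upstream sentiment model
    confidence: float  # assumed to be the bot's confidence in its own answer


def should_hand_off(turn: Turn) -> bool:
    """Return True when any of the three triggers fires."""
    words = {w.strip(".,!?").lower() for w in turn.message.split()}
    if words & ESCALATION_KEYWORDS:
        return True  # the user explicitly asked for a person
    if turn.sentiment < MIN_SENTIMENT:
        return True  # the user sounds strongly negative
    return turn.confidence < MIN_CONFIDENCE  # the bot is unsure of its answer
```

In a real deployment the conversation transcript would travel with the escalation so the human agent keeps the context.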

Core RAG engine serves retrieval-grounded answers; hook it to your UI for multi-turn chat.

Multi-lingual if the LLM you pick supports it.

Lead-capture or human handoff flows are yours to build through the API.

✅ #1 accuracy – Median 5/5 in independent benchmarks, 10% lower hallucination than OpenAI

✅ Source citations – Every response includes clickable links to original documents

✅ 93% resolution rate – Handles queries autonomously, reducing human workload

✅ 92 languages – Native multilingual support without per-language config

✅ Lead capture – Built-in email collection, custom forms, real-time notifications

✅ Human handoff – Escalation with full conversation context preserved

GUI Widget Editor – Logo, colors, title, messages, FAQs (⚠️ CSS not documented)

White-Labeling – Custom domain (CNAME), full logo replacement, agent identity renaming

100+ Tone Options – Friendly, Professional, TaxAssistant, Finance advisor, Casual, polite

Dynamic Routing – User context (VIP, first-time, veteran) for metadata-driven personalization

Fully bespoke—design any UI you want and skin it to match your brand.

SciPhi focuses on the back end, so front-end look-and-feel is entirely up to you.

Full white-labeling included – Colors, logos, CSS, custom domains at no extra cost

2-minute setup – No-code wizard with drag-and-drop interface

Persona customization – Control AI personality, tone, response style via pre-prompts

Visual theme editor – Real-time preview of branding changes

Domain allowlisting – Restrict embedding to approved sites only
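Domain allowlisting, as described above, amounts to checking the embedding page's origin against a list of approved hosts. The sketch below shows one common way to do that check; the function name and the choice to accept subdomains are assumptions, not a description of how CustomGPT enforces it.

```python
from urllib.parse import urlsplit


def is_allowed_origin(origin: str, allowlist: set[str]) -> bool:
    """Return True if the origin's host is an approved domain or a subdomain of one."""
    host = urlsplit(origin).hostname
    if host is None:
        return False  # no scheme/host present, so nothing to trust
    host = host.lower()
    # Match the exact domain, or a subdomain ending in ".<domain>".
    return any(host == d or host.endswith("." + d) for d in allowlist)
```

Matching on `"." + d` rather than a bare suffix keeps look-alike hosts such as `evil-example.com` from passing a check for `example.com`.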

Starter (Free) – GPT-4o mini only

Growth – GPT-4o mini + Claude

Enterprise – GPT-4o + Multi-layer automatic routing per query part

RAGless Architecture – Query-writing AI; "no embeddings, no hallucinations"

⚠️ No Runtime Switching – Plan-based selection only

LLM-agnostic—GPT-4, Claude, Llama 2, you choose.

Pick, fine-tune, or swap models anytime to balance cost and performance


GPT-5.1 models – Latest thinking models (Optimal & Smart variants)

GPT-4 series – GPT-4, GPT-4 Turbo, GPT-4o available

Claude 4.5 – Anthropic's Opus available for Enterprise

Auto model routing – Balances cost/performance automatically

Zero API key management – All models managed behind the scenes

Developer Experience (API & SDKs)

Base URL – https://api-prod.usefini.com (v2, Bearer Token auth)

Core Endpoints – /v2/bots/ask-question, /v2/bots/links/*, feedback, chat history

⚠️ NO Official SDKs – Only Python and Node.js examples
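Since there is no official SDK, a call to the ask-question endpoint has to be assembled by hand. The sketch below only builds the request from the base URL, path, and Bearer auth listed above. The JSON body, and in particular the `question` field name, is a guess for illustration; the real schema should be taken from Fini's API docs.

```python
import json
from urllib.request import Request

BASE_URL = "https://api-prod.usefini.com"  # base URL as listed above


def build_ask_request(token: str, question: str) -> Request:
    """Build (but do not send) a POST to the ask-question endpoint."""
    # "question" is an assumed field name, not confirmed by the source.
    body = json.dumps({"question": question}).encode("utf-8")
    return Request(
        url=f"{BASE_URL}/v2/bots/ask-question",
        data=body,
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

Passing the returned object to `urllib.request.urlopen` would send it. Error handling and rate-limit handling are left out here.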

Documentation Quality – 3/5 completeness, 2/5 error handling, 1/5 rate limits

Paramount – Open-source tool (github.com/ask-fini/paramount) for accuracy measurement

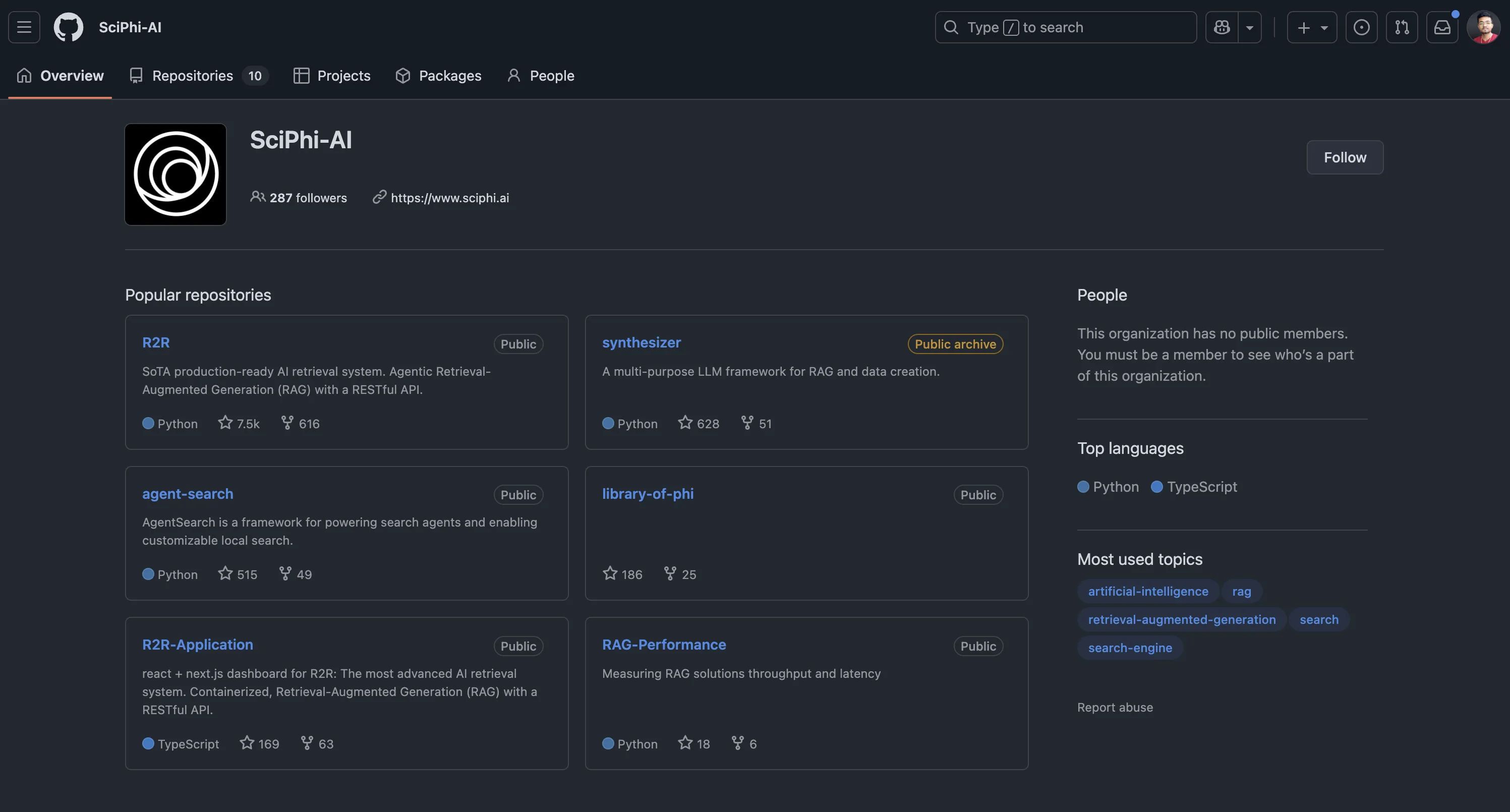

REST API plus a Python client (R2RClient) handle ingest and query tasks

Docs and GitHub repos offer deep dives and open-source starter code


REST API – Full-featured for agents, projects, data ingestion, chat queries

Python SDK – Open-source customgpt-client with full API coverage

Postman collections – Pre-built requests for rapid prototyping

Webhooks – Real-time event notifications for conversations and leads

OpenAI compatible – Use existing OpenAI SDK code with minimal changes

✅ 97-98% Accuracy Claim – Column Tax (94%, 98% resolved), Qogita (90%, 121% SLA)

6 Hallucination-Prevention Mechanisms – RAGless, LLM filtering, confidence gating, guardrails, skill modules

Accuracy Tools – Sophia AI Evaluator (Growth/Enterprise), Paramount, CXACT Benchmarking

✅ 80% Ticket Resolution – End-to-end without human intervention

Hybrid search (dense + keyword) keeps retrieval fast and sharp.

Knowledge-graph boosts (HybridRAG) drive up to 150% better accuracy.

Sub-second latency—even at enterprise scale.

Sub-second responses – Optimized RAG with vector search and multi-layer caching

Benchmark-proven – 13% higher accuracy, 34% faster than OpenAI Assistants API

Anti-hallucination tech – Responses grounded only in your provided content

OpenGraph citations – Rich visual cards with titles, descriptions, images

99.9% uptime – Auto-scaling infrastructure handles traffic spikes

Customization & Flexibility (Behavior & Knowledge)

Guidelines System – Tone, phrases, forbidden terms, formatting, response length

Bot Management – Starter 2 bots → Growth/Enterprise unlimited

Real-time Learning – Chat2KB auto-learning (MECE), Flows for specialized workflows

Dynamic Personalization – User context from backend, segment-based routing

Add new sources, tweak retrieval, mix collections—everything’s programmable.

Chain API calls, re-rank docs, or build full agentic flows

Live content updates – Add/remove content with automatic re-indexing

System prompts – Shape agent behavior and voice through instructions

Multi-agent support – Different bots for different teams

Smart defaults – No ML expertise required for custom behavior

⚠️ Pricing NOT Publicly Disclosed – Requires sales contact

Starter (Free) – GPT-4o mini, ~50 questions/month, ~50 docs, 2 bots

Growth (est. $999/mo) – GPT-4o mini/Claude, 1K docs, unlimited users, SOC 2, RBAC, Chat2KB

Enterprise (Custom) – GPT-4o, Multi-layer models, unlimited docs, AI Actions, white-glove onboarding

✅ Zero-Pay Guarantee – Only pay if >80% accuracy met

Free tier plus a $25/mo Dev tier for experiments.

Enterprise plans with custom pricing and self-hosting for heavy traffic


Standard: $99/mo – 60M words, 10 bots

Premium: $449/mo – 300M words, 100 bots

Auto-scaling – Managed cloud scales with demand

Flat rates – No per-query charges
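To compare the two flat-rate plans above on a like-for-like basis, it helps to divide the monthly price by the word allowance. The short sketch below does that arithmetic using only the figures quoted above; the dictionary layout and function name are illustrative.

```python
# Plan figures as quoted above: monthly price in USD and word allowance.
PLANS = {
    "Standard": {"usd_per_month": 99, "words": 60_000_000},
    "Premium": {"usd_per_month": 449, "words": 300_000_000},
}


def usd_per_million_words(plan: str) -> float:
    """Monthly price divided by the word allowance, in USD per million words."""
    p = PLANS[plan]
    return p["usd_per_month"] / (p["words"] / 1_000_000)


for name in PLANS:
    print(f"{name}: ${usd_per_million_words(name):.2f} per million words")
```

Standard works out to $1.65 per million words and Premium to about $1.50, so Premium is roughly 9% cheaper per word of capacity while costing about 4.5 times as much per month.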

✅ Certifications – SOC 2 Type II (zero findings), ISO 27001, ISO 42001, GDPR

⚠️ HIPAA Conflicting – Marketing claims vs. case study "next up" (verify)

⚠️ PCI DSS – Claimed but not on official security section (verify)

PII Shield – Auto-masks SSN, passport, license, taxpayer ID, credit cards

Encryption – AES-256 at rest, TLS 1.3 in transit; "no training" policy

Access Controls – RBAC (Growth/Enterprise), SSO, audit logging, EU/US data residency
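Auto-masking of the kind PII Shield is described as doing can be approximated with pattern matching. The sketch below is not Fini's implementation. It covers only US-style SSNs and card numbers, uses placeholder tokens of my own choosing, and adds a Luhn checksum so that ordinary long numbers such as order IDs are less likely to be masked by mistake.

```python
import re

SSN_RE = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")
# 13 to 16 digits, optionally separated by single spaces or hyphens.
CARD_RE = re.compile(r"\b\d(?:[ -]?\d){12,15}\b")


def luhn_ok(digits: str) -> bool:
    """Standard Luhn checksum used by payment card numbers."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:  # double every second digit, counting from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def mask_pii(text: str) -> str:
    """Replace SSNs and Luhn-valid card numbers with placeholder tokens."""
    text = SSN_RE.sub("[SSN]", text)

    def _mask_card(match: re.Match) -> str:
        digits = re.sub(r"\D", "", match.group())
        return "[CARD]" if luhn_ok(digits) else match.group()

    return CARD_RE.sub(_mask_card, text)
```

Passports, licenses, and taxpayer IDs vary by country and would need their own patterns or a trained entity recognizer.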

Customer data stays isolated in SciPhi Cloud; self-host for full control.

Standard encryption in transit and at rest; tune self-hosted setups to meet any regulation.

SOC 2 Type II + GDPR – Third-party audited compliance

Encryption – 256-bit AES at rest, SSL/TLS in transit

Access controls – RBAC, 2FA, SSO, domain allowlisting

Data isolation – Never trains on your data

Observability & Monitoring

Fini 2.0 (Jan 2025) – AI resolution, quality, confidence, CSAT, agent productivity, drop-off analysis

Chat History (Feb 2025) – Centralized view with filtering; CSV/JSON export for Looker/Tableau

AI Categorization – Auto-tags by topic (returns, login, pricing, shipping)

Knowledge Gap Analysis – Identifies unanswerable questions with improvement suggestions

Dev dashboard shows real-time logs, latency, and retrieval quality


Hook into Prometheus, Grafana, or other tools for deep monitoring.

Real-time dashboard – Query volumes, token usage, response times

Customer Intelligence – User behavior patterns, popular queries, knowledge gaps

Conversation analytics – Full transcripts, resolution rates, common questions

Export capabilities – API export to BI tools and data warehouses

Founding Team – Ex-Uber engineers; CEO led 4M+ interactions/month at Uber

Backed By – Y Combinator S22 ($125K), Matrix Partners, angels from Uber/Intercom/Softbank

Customers – HackerRank, Qogita, Column Tax, Bitdefender, Duolingo, Meesho, TrainingPeaks

Implementation – 60-day program; Enterprise gets dedicated AI engineers, 24/7 Slack

Community help via Discord and GitHub; Enterprise customers get dedicated support

Open-source core encourages community contributions and integrations.

Comprehensive docs – Tutorials, cookbooks, API references

Email + in-app support – Under 24hr response time

Premium support – Dedicated account managers for Premium/Enterprise

Open-source SDK – Python SDK, Postman, GitHub examples

5,000+ Zapier apps – CRMs, e-commerce, marketing integrations

Additional Considerations

RAGless Positioning – Criticizes RAG as "search engines" claiming "will become obsolete"

Action-Taking Focus – Actions vs. information ("Done! Refund processed" vs. "Find details here")

Target Customer – Enterprise B2C high-volume (fintech, e-commerce, healthcare)

vs. Intercom Fin – Claims 95%+ accuracy vs. ~80%; platform agnostic

⚠️ Less Suitable For – General Q&A, content generation, standalone chatbots

Advanced extras like GraphRAG and agentic flows push beyond basic Q&A

Great fit for enterprises needing deeply customized, fully integrated AI solutions.

Time-to-value – 2-minute deployment vs weeks with DIY

Always current – Auto-updates to latest GPT models

Proven scale – 6,000+ organizations, millions of queries

Multi-LLM – OpenAI + Claude reduces vendor lock-in

No-Code Interface & Usability

✅ Time to Go Live – "2 minutes" setup, <1 week full integration, 1-2 weeks Enterprise

No-Code Deployment – Widget (JS snippet), Search Bar, Standalone, native helpdesk one-click, Chrome Extension

Admin Dashboard – Agent creation, Knowledge Hub (Notion/Confluence/Drive), Prompt Configurator (escalation, guardrails)

Pre-Built Templates – E-commerce, fintech, SaaS onboarding workflows

No no-code UI—built for devs to wire into their own front ends.

Dashboard is utilitarian: good for testing and monitoring, not for everyday business users.

2-minute deployment – Fastest time-to-value in the industry

Wizard interface – Step-by-step with visual previews

Drag-and-drop – Upload files, paste URLs, connect cloud storage

In-browser testing – Test before deploying to production

Zero learning curve – Productive on day one

Market Position – Agentic AI for customer support; Sophie's 5-layer + RAGless claiming 97-98% accuracy

Key Competitors – Intercom Fin, Zendesk Answer Bot, Ada, Ultimate.ai, traditional RAG chatbots

✅ Competitive Advantages – 97-98% accuracy vs. ~80%, 20+ native integrations, RAGless, 100+ languages, Zero-Pay Guarantee

Best Value For – Enterprises prioritizing accuracy, action-taking AI, regulated industries (fintech, healthcare)

Market position – Developer-first RAG infrastructure combining open-source flexibility with managed cloud service

Target customers – Dev teams needing high-performance RAG, enterprises requiring ingestion at millions of tokens/second

Key competitors – LangChain/LangSmith, Deepset/Haystack, Pinecone Assistant, custom RAG implementations

Competitive advantages – HybridRAG (150% accuracy boost), async auto-scaling, 40+ formats, sub-second latency

Pricing advantage – Free tier + $25/mo Dev plan; open-source foundation enables cost optimization

Use case fit – Massive document volumes, advanced RAG needs, self-hosting control requirements

Market position – Leading RAG platform balancing enterprise accuracy with no-code usability. Trusted by 6,000+ orgs including Adobe, MIT, Dropbox.

Key differentiators – #1 benchmarked accuracy • 1,400+ formats • Full white-labeling included • Flat-rate pricing

vs OpenAI – 10% lower hallucination, 13% higher accuracy, 34% faster

vs Botsonic/Chatbase – More file formats, source citations, no hidden costs

vs LangChain – Production-ready in 2 min vs weeks of development

Starter (Free) – GPT-4o mini only (~50 questions/month)

Growth – GPT-4o mini + Claude, 1K docs, unlimited users

Enterprise – GPT-4o + Multi-layer automatic routing per query part

✅ Target Accuracy – 97-98% claim with human-in-the-loop customization

⚠️ No Manual Switching – Plan-based model selection only

LLM-Agnostic Architecture – GPT-4, GPT-3.5, Claude, Llama 2, and other open-source models

Model Flexibility – Easy swapping to balance cost/performance without vendor lock-in

Custom Support – Configure any LLM via API including fine-tuned or proprietary models

Embedding Providers – Multiple embedding options for semantic search and vector generation

✅ Full control over temperature, max tokens, and generation parameters

OpenAI – GPT-5.1 (Optimal/Smart), GPT-4 series

Anthropic – Claude 4.5 Opus/Sonnet (Enterprise)

Auto-routing – Intelligent model selection for cost/performance

Managed – No API keys or fine-tuning required

RAGless Architecture – Query-writing AI; "no embeddings, no hallucinations" with precise attribution

6-Mechanism Prevention – LLM filtering, confidence gating, guardrails, deterministic skill modules

Real-time Knowledge – Content used immediately after ingestion without retraining

Chat2KB (Growth/Enterprise) – Auto-extracts Q&A with MECE classification, conflict resolution

✅ Customer Results – Column Tax (94%, 98% resolved), Qogita (90%, 121% SLA)

HybridRAG Technology – Vector search + knowledge graphs for 150% accuracy improvement

Hybrid Search – Dense vector + keyword with reciprocal rank fusion

Agentic RAG – Reasoning agent for autonomous research across documents and web

Multimodal Ingestion – 40+ formats (PDFs, spreadsheets, audio) at massive scale

✅ Millions of tokens/second async auto-scaling ingestion throughput
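Reciprocal rank fusion, the method named above for merging dense-vector and keyword results, scores each document by summing 1 / (k + rank) across the input rankings. The sketch below is a generic version of that formula, not SciPhi's code. The function name is illustrative, and k = 60 is the commonly used default rather than a documented R2R setting.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Fuse several ranked lists of document IDs into one ranked list."""
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Highest fused score first; ties are broken by document ID for determinism.
    return sorted(scores, key=lambda d: (-scores[d], d))


dense = ["a", "b", "c"]    # hypothetical vector-search order
keyword = ["b", "d", "a"]  # hypothetical keyword-search order
print(reciprocal_rank_fusion([dense, keyword]))  # ['b', 'a', 'd', 'c']
```

Because only ranks are used, the two retrievers' raw scores never need to be put on a common scale, which is the main practical appeal of the method.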

✅ Sub-second latency even at enterprise scale with optimized operations

GPT-4 + RAG – Outperforms OpenAI in independent benchmarks

Anti-hallucination – Responses grounded in your content only

Automatic citations – Clickable source links in every response

Sub-second latency – Optimized vector search and caching

Scale to 300M words – No performance degradation at scale

✅ Enterprise B2C Support – High-volume fintech, e-commerce, healthcare (80% resolution, 97-98% accuracy)

✅ Action-Taking AI – Autonomous refunds, updates, CRM sync (Salesforce, Stripe, Shopify)

✅ Helpdesk Integration – 20+ native platforms (Zendesk, Intercom, Salesforce, Front) without Zapier

✅ PII-Sensitive Industries – Auto-masking SSN, passport, license, credit cards with PII Shield

⚠️ NOT Suitable For – General Q&A, content generation, no existing helpdesk

Enterprise Knowledge – Process millions of documents with knowledge graph relationships

Support Automation – RAG-powered support bots with accurate, grounded responses

Research & Analysis – Agentic RAG for autonomous research across collections and web

Compliance & Legal – Large document repositories with precise citation tracking

Internal Docs – Developer-focused RAG for code, API references, technical knowledge

Custom AI Apps – API-first architecture integrates into any application or workflow

Customer support – 24/7 AI handling common queries with citations

Internal knowledge – HR policies, onboarding, technical docs

Sales enablement – Product info, lead qualification, education

Documentation – Help centers, FAQs with auto-crawling

E-commerce – Product recommendations, order assistance

✅ SOC 2 Type II – Zero audit findings per Sprinto

✅ ISO 27001 & 42001 – Information security + AI governance

✅ GDPR Compliant – Full data subject rights, EU data residency

⚠️ HIPAA Conflicting – Marketing claims vs. case study "next up" (verify)

⚠️ PCI DSS – Claimed but not on official security page (verify)

"No Training on Data" – OpenAI DPA; PII Shield; AES-256, TLS 1.3

Data Isolation – Single-tenant architecture with isolated customer data in SciPhi Cloud

Self-Hosting Option – On-premise deployment for complete data control in regulated industries

Encryption Standards – TLS in transit, AES-256 at rest encryption

Access Controls – Document-level granular permissions with role-based access control (RBAC)

✅ Open-source R2R core enables security audits and compliance validation

✅ Self-hosted deployments tunable for HIPAA, SOC 2, and other regulations

SOC 2 Type II + GDPR – Regular third-party audits, full EU compliance

256-bit AES encryption – Data at rest; SSL/TLS in transit

SSO + 2FA + RBAC – Enterprise access controls with role-based permissions

Data isolation – Never trains on customer data

Domain allowlisting – Restrict chatbot to approved domains

⚠️ Pricing NOT Publicly Disclosed – Requires sales contact

Starter (Free) – GPT-4o mini, ~50 questions/month, ~50 docs, 2 bots

Growth (est. $999/mo) – GPT-4o mini/Claude, 1K docs, unlimited users, SOC 2, RBAC, Chat2KB

Enterprise (Custom) – GPT-4o, Multi-layer models, unlimited docs, AI Actions, white-glove onboarding

✅ Zero-Pay Guarantee – Only pay if >80% accuracy met (unique risk mitigation)

Free Tier – Generous no-credit-card tier for experimentation and development

Developer Plan – $25/month for individual developers and small projects

Enterprise Plans – Custom pricing based on scale, features, and support

Self-Hosting – Open-source R2R available free (infrastructure costs only)

✅ Flat subscription pricing without per-query or per-document charges

✅ Managed cloud handles infrastructure, deployment, scaling, updates, maintenance

Standard: $99/mo – 10 chatbots, 60M words, 5K items/bot

Premium: $449/mo – 100 chatbots, 300M words, 20K items/bot

Enterprise: Custom – SSO, dedicated support, custom SLAs

7-day free trial – Full Standard access, no charges

Flat-rate pricing – No per-query charges, no hidden costs

Founding Team – Ex-Uber engineers; CEO led 4M+ interactions/month; Y Combinator S22, Matrix Partners

Customers – HackerRank, Qogita, Column Tax, Bitdefender, Duolingo, Meesho

60-Day Implementation – Discovery → Deployment → Optimization → Production with dedicated managers

Enterprise Support – Dedicated AI engineers, CSMs, 24/7 Slack channels

⚠️ Documentation Quality – 3/5 completeness, 2/5 error handling, 1/5 rate limits; NO SDKs

Comprehensive Docs – Detailed docs at r2r-docs.sciphi.ai covering all features and endpoints

GitHub Repository – Active open-source development at github.com/SciPhi-AI/R2R with code examples

Community Support – Discord community and GitHub issues for peer support

Enterprise Support – Dedicated channels for enterprise customers with SLAs

✅ Python client (R2RClient) with extensive examples and starter code

✅ Developer dashboard with real-time logs, latency, and retrieval quality metrics

Documentation hub – Docs, tutorials, API references

Support channels – Email, in-app chat, dedicated managers (Premium+)

Open-source – Python SDK, Postman, GitHub examples

Community – User community + 5,000 Zapier integrations

Limitations & Considerations

⚠️ Pricing Opacity – No public pricing creates evaluation friction

⚠️ HIPAA & PCI DSS Unverified – Conflicting claims require verification

⚠️ Documentation Limitations – Basic API docs (3/5, 2/5, 1/5), no SDKs

⚠️ Small Team (14 employees) – Limited capacity vs. enterprise competitors

⚠️ Platform Lock-In – Requires existing helpdesk (Zendesk/Intercom/Salesforce)

✅ Best For – Enterprise B2C high-volume prioritizing 97-98% accuracy, 60-day commitment

⚠️ Developer-Focused – Requires technical expertise to build and wire custom front ends

⚠️ Infrastructure Requirements – Self-hosting needs GPU infrastructure and DevOps expertise

⚠️ Integration Effort – API-first design means building your own chat UI

⚠️ Learning Curve – Advanced features like knowledge graphs require RAG concept understanding

⚠️ Community Support Limits – Open-source support relies on community unless enterprise plan

Managed service – Less control over RAG pipeline vs build-your-own

Model selection – OpenAI + Anthropic only; no Cohere, AI21, open-source

Real-time data – Requires re-indexing; not ideal for live inventory/prices

Enterprise features – Custom SSO only on Enterprise plan

Sophie AI Agent – Fully autonomous resolving 80% of tickets end-to-end without human intervention

5-Layer Execution – Safety Guardrails (40+ filters, PII), LLM Supervisor, Skills, Feedback, Traceability

Multi-Layer Architecture (Enterprise) – Automatic routing to best LLM per query part; specialized agents

Action-Taking – Autonomous refunds, updates, CRM sync (Salesforce, Stripe, Shopify)

✅ 100+ Languages – Automatic translation with locale-based routing

Agentic RAG – Reasoning agent for autonomous research across documents/web with multi-step problem solving

Advanced Toolset – Semantic search, metadata search, document retrieval, web search, web scraping capabilities

Multi-Turn Context – Stateful dialogues maintaining conversation history via conversation_id for follow-ups

Citation Transparency – Detailed responses with source citations for fact-checking and verification

⚠️ No Pre-Built UI – API-first platform requires custom front-end development
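The multi-turn behavior described above depends on keying history by a `conversation_id` so that follow-up questions are answered in context. The sketch below illustrates that idea with an in-memory store. It is not the R2RClient API: the class, its method names, and the `max_turns` window are all hypothetical.

```python
import uuid


class ConversationStore:
    """Toy in-memory history keyed by conversation_id (illustrative only)."""

    def __init__(self) -> None:
        self._history: dict[str, list[dict[str, str]]] = {}

    def start(self) -> str:
        """Create a new conversation and return its ID."""
        conversation_id = str(uuid.uuid4())
        self._history[conversation_id] = []
        return conversation_id

    def append(self, conversation_id: str, role: str, content: str) -> None:
        """Record one message under an existing conversation."""
        self._history[conversation_id].append({"role": role, "content": content})

    def context(self, conversation_id: str, max_turns: int = 10) -> list[dict[str, str]]:
        """Return the most recent messages to send along with a follow-up."""
        return self._history.get(conversation_id, [])[-max_turns:]
```

A front end built on an API-first platform would hold on to the ID between requests and pass it with each follow-up question.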

⚠️ No Lead Analytics – Lead capture and dashboards must be implemented at application layer

Custom AI Agents – Autonomous GPT-4/Claude agents for business tasks

Multi-Agent Systems – Specialized agents for support, sales, knowledge

Memory & Context – Persistent conversation history across sessions

Tool Integration – Webhooks + 5,000 Zapier apps for automation

Continuous Learning – Auto re-indexing without manual retraining

RAG-as-a-Service Assessment

Platform Type – AGENTIC AI CUSTOMER SUPPORT with RAGless architecture, NOT traditional RAG-as-a-Service

Architectural Approach – Query-writing AI; "no embeddings, no hallucinations" with deterministic results

Sophie's 5-Layer Framework – 97-98% accuracy vs. ~80% competitors; Zero-Pay Guarantee

⚠️ Developer Experience – Basic REST API (v2), NO SDKs, docs (3/5, 2/5, 1/5)

No-Code Capabilities – "2 minutes" setup, 20+ native helpdesk integrations, "Day 1 Ready-to-Use"

⚠️ NOT A RAG PLATFORM – Explicitly positions AGAINST traditional RAG; fundamentally different

⚠️ NOT Suitable For – General Q&A, content generation, no helpdesk, programmatic RAG API needs

Platform Type – HYBRID RAG-AS-A-SERVICE combining open-source R2R with managed SciPhi Cloud

Core Mission – Bridge experimental RAG models to production-ready systems with deployment flexibility

Developer Target – Built for OSS community, startups, enterprises emphasizing developer flexibility and control

RAG Leadership – HybridRAG (150% accuracy), millions of tokens/second, 40+ formats, sub-second latency

✅ Open-source R2R core on GitHub enables customization, portability, avoids vendor lock-in

⚠️ NO no-code features – No chat widgets, visual builders, pre-built integrations or dashboards

Platform type – TRUE RAG-AS-A-SERVICE with managed infrastructure

API-first – REST API, Python SDK, OpenAI compatibility, MCP Server

No-code option – 2-minute wizard deployment for non-developers

Hybrid positioning – Serves both dev teams (APIs) and business users (no-code)

Enterprise ready – SOC 2 Type II, GDPR, WCAG 2.0, flat-rate pricing
