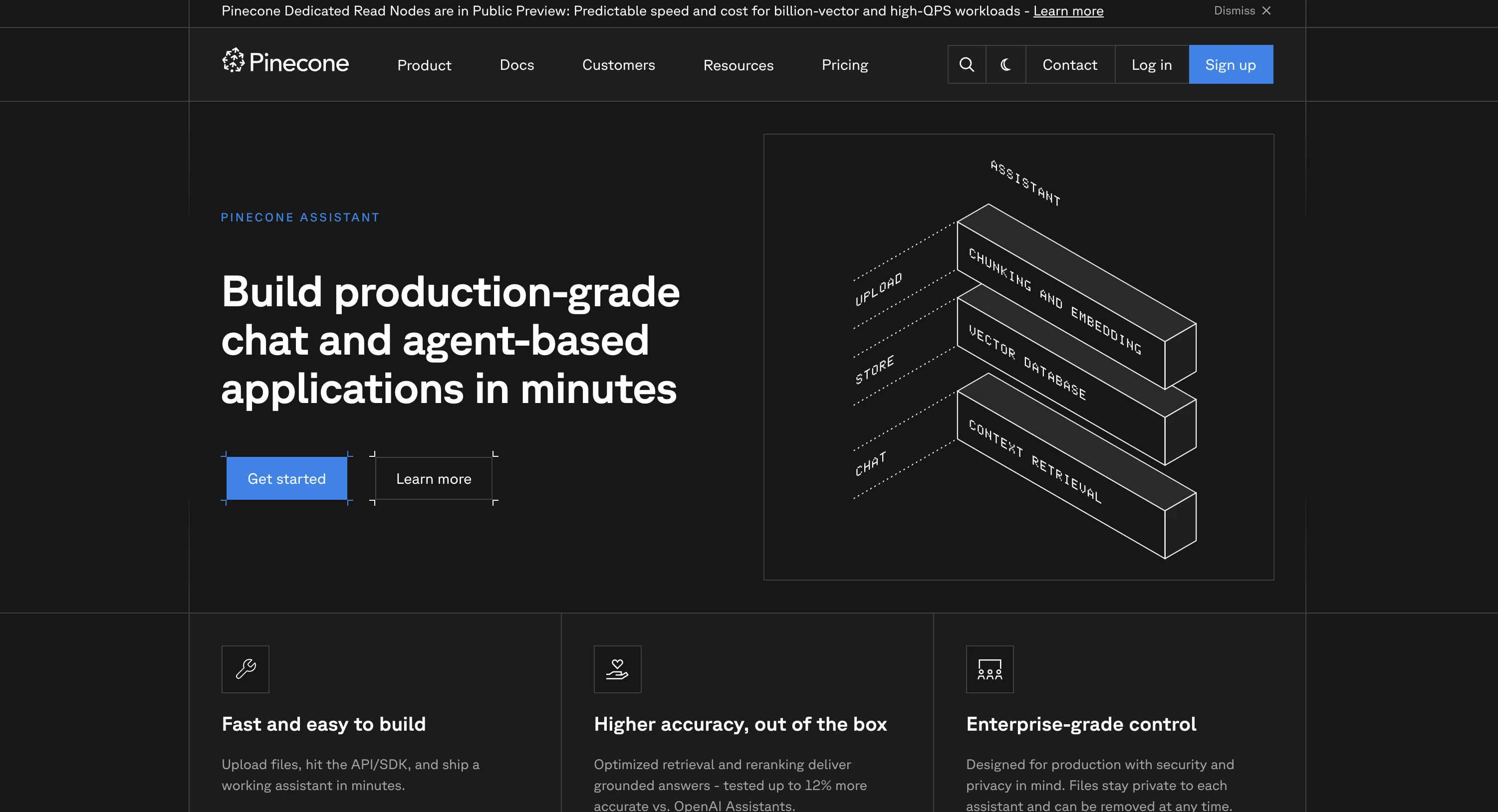

Data Ingestion & Knowledge Sources

- ✅ File Format Support – PDF, JSON, Markdown, Word, plain text auto-chunked and embedded. [Pinecone Learn]
- ✅ Automatic Processing – Chunks, embeds, stores uploads in Pinecone index for fast search.
- ✅ Metadata Filtering – Add tags to files for smarter retrieval results. [Metadata]
- ⚠️ No Native Connectors – No web crawler or Drive connector; push files via API/SDK.
- ✅ Enterprise Scale – Billions of embeddings; preview tier supports 10K files or 10GB per assistant.

- ✅ Auto-Indexing – Points at files, indexes unstructured data automatically without manual setup
- ✅ Auto-Sync – Connected repositories sync automatically, document changes reflected almost instantly
- File Formats – Supports PDF, DOCX, PPT, TXT and common enterprise formats
- ⚠️ Limited Scope – No website crawling or YouTube ingestion, narrower than CustomGPT
- Enterprise Scale – Handles large corporate data sets, exact limits not published

- 1,400+ file formats – PDF, DOCX, Excel, PowerPoint, Markdown, HTML + auto-extraction from ZIP/RAR/7Z archives
- Website crawling – Sitemap indexing with configurable depth for help docs, FAQs, and public content
- Multimedia transcription – AI Vision, OCR, YouTube/Vimeo/podcast speech-to-text built-in
- Cloud integrations – Google Drive, SharePoint, OneDrive, Dropbox, Notion with auto-sync
- Knowledge platforms – Zendesk, Freshdesk, HubSpot, Confluence, Shopify connectors
- Massive scale – 60M words (Standard) / 300M words (Premium) per bot with no performance degradation

- ⚠️ Backend Service Only – No built-in chat widget or turnkey Slack/Teams integration.
- Developer-Built Front-Ends – Teams craft custom UIs or integrate via code/Pipedream.
- REST API Integration – Embed anywhere by hitting endpoints; no one-click Zapier connector.
- ✅ Full Flexibility – Drop into any environment with your own UI and logic.

- ⚠️ Standalone Only – Own chat/search interface, not a "deploy everywhere" platform
- ⚠️ No External Channels – No Slack bot, Zapier connector, or public API
- Web/Desktop UI – Users interact through Pyx's interface, minimal third-party chat synergy
- Custom Integration – Deeper integrations require custom dev work or future updates

- Website embedding – Lightweight JS widget or iframe with customizable positioning
- CMS plugins – WordPress, WIX, Webflow, Framer, SquareSpace native support
- 5,000+ app ecosystem – Zapier connects CRMs, marketing, e-commerce tools
- MCP Server – Integrate with Claude Desktop, Cursor, ChatGPT, Windsurf
- OpenAI SDK compatible – Drop-in replacement for OpenAI API endpoints
- LiveChat + Slack – Native chat widgets with human handoff capabilities

- Multi-Turn Q&A – GPT-4 or Claude; stateless conversation requires passing prior messages yourself.
- ⚠️ No Business Extras – No lead capture, handoff, or chat logs; add in app layer.
- ✅ Context-Grounded Answers – Returns cited responses tied to your documents reducing hallucinations.
- Core Focus – Rock-solid retrieval plus response; business features in your codebase.
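
Because the conversation is stateless, the calling application has to keep the transcript and resend it on every turn. The sketch below shows one way to do that in plain Python. It does not call any real SDK: `chat_fn`, `Conversation`, and `fake_backend` are hypothetical names introduced for illustration, and `chat_fn` stands in for whatever request actually sends the message list to the assistant.

```python
from typing import Callable, Dict, List

# One chat message, e.g. {"role": "user", "content": "..."}.
Message = Dict[str, str]


class Conversation:
    """Keeps the transcript client-side because the backend is stateless."""

    def __init__(self, chat_fn: Callable[[List[Message]], str]) -> None:
        # chat_fn is a hypothetical stand-in for the real API call: it takes
        # the full message list and returns the assistant's reply text.
        self._chat_fn = chat_fn
        self.history: List[Message] = []

    def ask(self, question: str) -> str:
        self.history.append({"role": "user", "content": question})
        # Send the whole history each turn so follow-ups keep their context.
        reply = self._chat_fn(list(self.history))
        self.history.append({"role": "assistant", "content": reply})
        return reply


def fake_backend(messages: List[Message]) -> str:
    """Placeholder backend that reports how many messages it was sent."""
    return f"received {len(messages)} message(s)"


convo = Conversation(fake_backend)
first = convo.ask("What file formats are supported?")
second = convo.ask("And what about scanned PDFs?")
```

On the second turn the placeholder backend receives three messages (two questions plus the first reply), which is the behavior a real stateless endpoint would need in order to answer a follow-up. Persisting `history` (for chat logs) would also live in this application layer.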

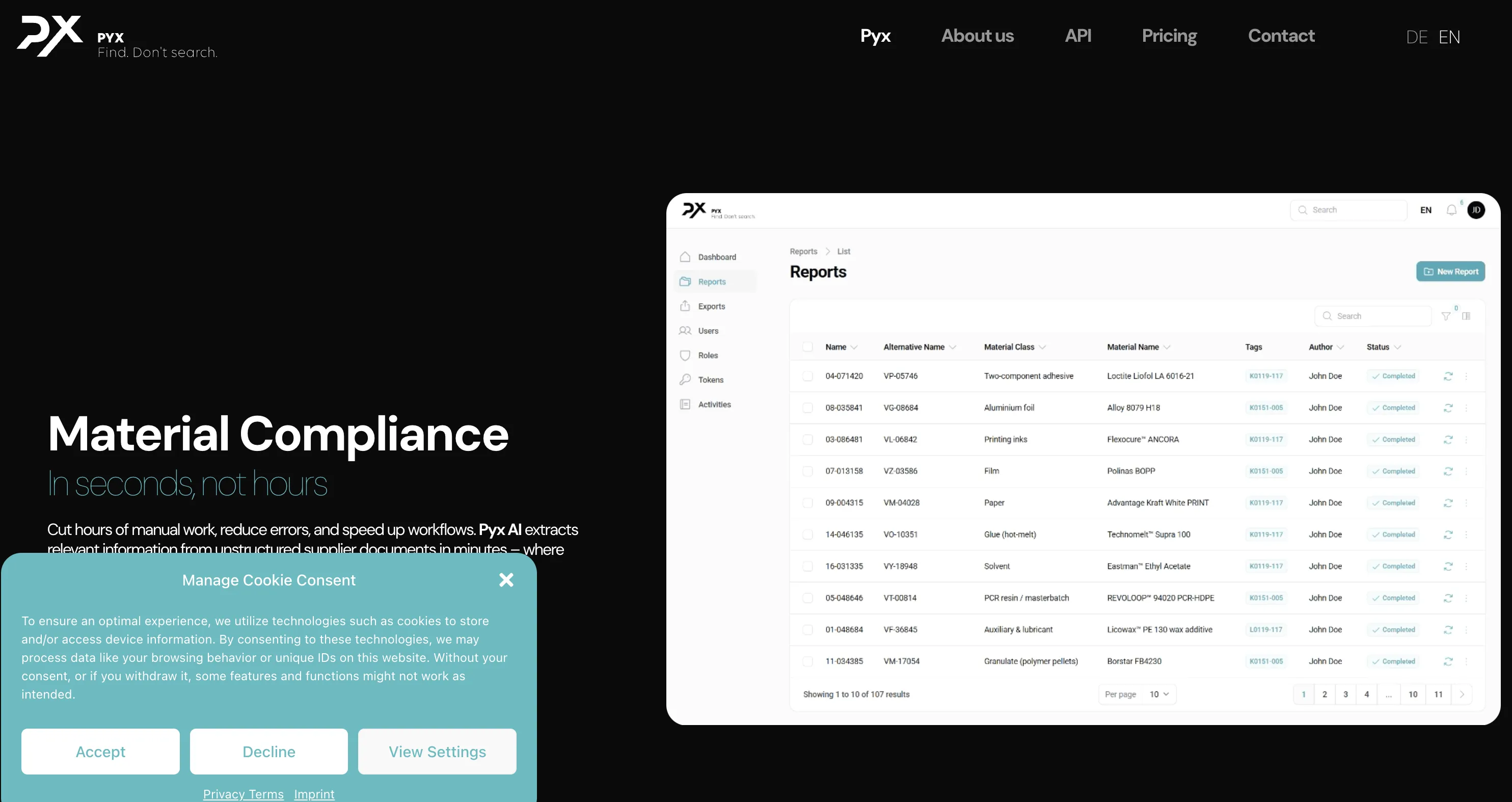

- Conversational Search – Context-aware Q&A over enterprise documents with follow-up questions
- ⚠️ Internal Focus – Designed for knowledge management, no lead capture or human handoff
- Multi-Language – Likely supports multiple languages, though not a headline feature
- ⚠️ Basic Analytics – Stores chat history, fewer business insights than customer-facing tools

- ✅ #1 accuracy – Median 5/5 in independent benchmarks, 10% lower hallucination than OpenAI
- ✅ Source citations – Every response includes clickable links to original documents
- ✅ 93% resolution rate – Handles queries autonomously, reducing human workload
- ✅ 92 languages – Native multilingual support without per-language config
- ✅ Lead capture – Built-in email collection, custom forms, real-time notifications
- ✅ Human handoff – Escalation with full conversation context preserved

- ✅ 100% Your UI – No default interface; branding baked in by design, fully white-label.
- No Pinecone Badge – Zero branding to hide; complete control over look and feel.
- Domain Control – Gating and embed rules handled in code via API keys/auth.
- ✅ Unlimited Freedom – Pinecone ships zero CSS; style however you want.

- ⚠️ Minimal Branding – Logo/color tweaks only, designed as internal tool not white-label
- ⚠️ No Embedding – Standalone interface, no domain-embed or widget options available
- Pyx UI Only – Look stays "Pyx AI" by design, public branding not supported
- Security Focus – Emphasis on user management and access controls over theming

- Full white-labeling included – Colors, logos, CSS, custom domains at no extra cost
- 2-minute setup – No-code wizard with drag-and-drop interface
- Persona customization – Control AI personality, tone, response style via pre-prompts
- Visual theme editor – Real-time preview of branding changes
- Domain allowlisting – Restrict embedding to approved sites only

- ✅ GPT-4 & Claude 3.5 – Pick model per query; supports GPT-4o, GPT-4, Claude Sonnet. [Blog]
- ⚠️ Manual Model Selection – No auto-routing; explicitly choose GPT-4 or Claude each request.
- Limited Options – GPT-3.5 not in preview; more LLMs coming soon on roadmap.
- Standard Vector Search – No proprietary rerank layer; raw LLM handles final answer generation.

- ⚠️ Undisclosed Model – Likely GPT-3.5/GPT-4 but exact model not publicly documented
- ⚠️ No Model Selection – Cannot switch LLMs or configure speed vs accuracy tradeoffs
- ⚠️ Single Configuration – Every query uses same model, no toggles or fine-tuning
- Closed Architecture – Model details, context window, capabilities hidden from users intentionally

- GPT-5.1 models – Latest thinking models (Optimal & Smart variants)
- GPT-4 series – GPT-4, GPT-4 Turbo, GPT-4o available
- Claude 4.5 – Anthropic's Opus available for Enterprise
- Auto model routing – Balances cost/performance automatically
- Zero API key management – All models managed behind the scenes

Developer Experience (API & SDKs)

- ✅ Rich SDK Support – Python, Node.js SDKs plus clean REST API. [SDK Support]
- Comprehensive Endpoints – Create/delete assistants, upload/list files, run chat/retrieval queries.
- ✅ OpenAI-Compatible API – Simplifies migration from OpenAI Assistants to Pinecone Assistant.
- Documentation – Reference architectures and copy-paste examples for typical RAG flows.

- ⚠️ No API – No open API or SDKs, everything through Pyx interface
- ⚠️ No Embedding – Cannot integrate into other apps or call programmatically
- Closed Ecosystem – No GitHub examples, community plug-ins, or extensibility options
- Turnkey Only – Great for ready-made tool, limits deep customization or extensions

- REST API – Full-featured for agents, projects, data ingestion, chat queries
- Python SDK – Open-source customgpt-client with full API coverage
- Postman collections – Pre-built requests for rapid prototyping
- Webhooks – Real-time event notifications for conversations and leads
- OpenAI compatible – Use existing OpenAI SDK code with minimal changes

- ✅ Fast Retrieval – Pinecone vector DB delivers speed; GPT-4/Claude ensures quality answers.
- ✅ Benchmarked Superior – 12% more accurate vs OpenAI Assistants via optimized retrieval. [Benchmark]
- Citations Reduce Hallucinations – Context plus citations tie answers to real data sources.
- Evaluation API – Score accuracy against gold-standard datasets for continuous improvement.

- Real-Time Answers – Serves accurate responses from internal documents, sparse public benchmarks
- Auto-Sync Freshness – Connected repositories keep retrieval context always current automatically
- ⚠️ Limited Transparency – No anti-hallucination metrics or advanced re-ranking details published
- Competitive RAG – Likely comparable to standard GPT-based systems on relevance control

- Sub-second responses – Optimized RAG with vector search and multi-layer caching
- Benchmark-proven – 13% higher accuracy, 34% faster than OpenAI Assistants API
- Anti-hallucination tech – Responses grounded only in your provided content
- OpenGraph citations – Rich visual cards with titles, descriptions, images
- 99.9% uptime – Auto-scaling infrastructure handles traffic spikes

Customization & Flexibility (Behavior & Knowledge)

- Custom System Prompts – Add persona control per call; persistent UI not in preview yet.
- ✅ Real-Time Updates – Add, update, delete files anytime; changes reflect immediately in answers.
- Metadata Filtering – Narrow retrieval by tags/attributes at query time for smarter results.
- ⚠️ Stateless Design – Long-term memory or multi-agent logic lives in your app code.
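
To make "narrow retrieval by tags/attributes at query time" concrete, here is a small conceptual sketch in plain Python. It is not the service's API or its internal implementation (a hosted service would apply the filter server-side, alongside vector search). The `Chunk` shape, the `filter_chunks` helper, and the sample tags are all assumptions made up for illustration.

```python
from typing import Any, Dict, List

# Hypothetical shape of a stored chunk: its text plus the tags attached
# to the file it came from.
Chunk = Dict[str, Any]


def filter_chunks(chunks: List[Chunk], required: Dict[str, Any]) -> List[Chunk]:
    """Keep only chunks whose metadata matches every required key/value."""
    return [
        chunk
        for chunk in chunks
        if all(chunk["metadata"].get(key) == value for key, value in required.items())
    ]


chunks: List[Chunk] = [
    {"text": "Refund policy for 2024.", "metadata": {"team": "support", "year": 2024}},
    {"text": "Refund policy for 2023.", "metadata": {"team": "support", "year": 2023}},
    {"text": "Q3 revenue summary.", "metadata": {"team": "finance", "year": 2024}},
]

# Only chunks tagged for the support team in 2024 remain candidates
# for similarity ranking and answer generation.
candidates = filter_chunks(chunks, {"team": "support", "year": 2024})
```

The point of filtering before ranking is that irrelevant documents never reach the model, which is why consistent tagging at upload time matters.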

- ✅ Auto-Sync Updates – Knowledge base updated without manual uploads or scheduling
- ⚠️ No Persona Controls – AI voice stays neutral, no tone or behavior customization
- ✅ Access Controls – Strong role-based permissions, admins set document visibility per user
- Closed Environment – Great for content updates, limited for AI behavior or deployment

- Live content updates – Add/remove content with automatic re-indexing
- System prompts – Shape agent behavior and voice through instructions
- Multi-agent support – Different bots for different teams
- Smart defaults – No ML expertise required for custom behavior

- Usage-Based Model – Free Starter, then pay for storage/tokens/assistant fee. [Pricing]
- Sample Costs – ~$3/GB-month storage, $8/M input tokens, $15/M output tokens, $0.20/day per assistant.
- ✅ Linear Scaling – Costs scale with usage; ideal for growing applications over time.
- Enterprise Tier – Higher concurrency, multi-region, volume discounts, custom SLAs.
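
As a rough illustration of how the sample rates above add up, the sketch below estimates one month of usage. The rates are the approximate figures quoted in the list; the function name, the 30-day month, and the example workload (5 GB, 2M input tokens, 0.5M output tokens, one assistant) are assumptions chosen for the example, and plan minimums or free-tier allowances are ignored.

```python
# Approximate sample rates quoted above (USD).
STORAGE_PER_GB_MONTH = 3.00
INPUT_PER_MILLION_TOKENS = 8.00
OUTPUT_PER_MILLION_TOKENS = 15.00
ASSISTANT_FEE_PER_DAY = 0.20


def estimate_monthly_cost(
    storage_gb: float,
    input_tokens_millions: float,
    output_tokens_millions: float,
    assistants: int = 1,
    days: int = 30,
) -> float:
    """Sum storage, token, and per-assistant charges for one month."""
    storage = storage_gb * STORAGE_PER_GB_MONTH
    tokens = (
        input_tokens_millions * INPUT_PER_MILLION_TOKENS
        + output_tokens_millions * OUTPUT_PER_MILLION_TOKENS
    )
    assistant_fees = assistants * days * ASSISTANT_FEE_PER_DAY
    return storage + tokens + assistant_fees


# Hypothetical workload: 5 GB stored, 2M input and 0.5M output tokens.
# 15.00 (storage) + 16.00 + 7.50 (tokens) + 6.00 (assistant) = about 44.50
example_cost = estimate_monthly_cost(5, 2.0, 0.5)
```

Each term grows in proportion to its input, which is what "linear scaling" means here: doubling token volume doubles only the token portion of the bill.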

- Seat-Based Pricing – ~$30 per user per month, predictable monthly costs
- ✅ Cost-Effective Small Teams – Affordable for teams under 50 users
- ⚠️ Large Team Costs – 100 users = $3,000/month, can scale expensively
- Unlimited Content – Document/token limits not published, gated only by user seats
- Free Trial + Enterprise – Hands-on trial available, custom pricing for large deployments

- Standard: $99/mo – 60M words, 10 bots
- Premium: $449/mo – 300M words, 100 bots
- Auto-scaling – Managed cloud scales with demand
- Flat rates – No per-query charges

- ✅ Data Isolation – Files encrypted and siloed; never used to train models. [Privacy]
- ✅ SOC 2 Type II – Compliant with strong encryption and optional dedicated VPC.
- Full Content Control – Delete or replace content anytime; control what assistant remembers.
- Enterprise Options – SSO, advanced roles, custom hosting for strict compliance requirements.

- ✅ GDPR Compliance – Germany-based, implicit EU data protection and regional sovereignty
- ✅ Enterprise Privacy – Data isolated per customer, encrypted in transit and at rest
- ✅ No Model Training – Customer data not used for external LLM training
- ✅ Role-Based Access – Built-in controls, admins set document visibility per role
- ⚠️ Limited Certifications – On-prem deployment and SOC 2/ISO 27001/HIPAA not publicly documented

- SOC 2 Type II + GDPR – Third-party audited compliance
- Encryption – 256-bit AES at rest, SSL/TLS in transit
- Access controls – RBAC, 2FA, SSO, domain allowlisting
- Data isolation – Never trains on your data

Observability & Monitoring

- Dashboard Metrics – Shows token usage, storage, concurrency; no built-in convo analytics. [Token Usage]
- Evaluation API – Track accuracy over time against gold-standard benchmarks.
- ⚠️ Manual Chat Logs – Dev teams handle chat-log storage if transcripts needed.
- External Integration – Easy to pipe metrics into Datadog, Splunk via API logs.

- Basic Stats – User activity, query counts, top-referenced documents for admins
- ⚠️ No Deep Analytics – No conversation analytics dashboards or real-time logging
- Adoption Tracking – Useful for usage monitoring, lighter insights than full suites
- Set-and-Forget – Minimal monitoring overhead, contact support for issues

- Real-time dashboard – Query volumes, token usage, response times
- Customer Intelligence – User behavior patterns, popular queries, knowledge gaps
- Conversation analytics – Full transcripts, resolution rates, common questions
- Export capabilities – API export to BI tools and data warehouses

- ✅ Lively Community – Forums, Slack/Discord, Stack Overflow tags with active developers.
- Extensive Documentation – Quickstarts, RAG best practices, and comprehensive API reference.
- Support Tiers – Email/priority support for paid; Enterprise adds custom SLAs and engineers.
- Framework Integration – Smooth integration with LangChain, LlamaIndex, open-source RAG frameworks.

- ✅ Direct Support – Email, phone, chat with hands-on onboarding approach
- ⚠️ No Open Community – Closed solution, no plug-ins or user-built extensions
- Internal Roadmap – Product updates from Pyx only, no community marketplace
- Quick Setup Focus – Emphasizes minimal admin overhead for internal knowledge search

- Comprehensive docs – Tutorials, cookbooks, API references
- Email + in-app support – Under 24hr response time
- Premium support – Dedicated account managers for Premium/Enterprise
- Open-source SDK – Python SDK, Postman, GitHub examples
- 5,000+ Zapier apps – CRMs, e-commerce, marketing integrations

Additional Considerations

- ⚠️ Developer Platform Only – Super flexible but no off-the-shelf UI or business extras.
- ✅ Pinecone Vector DB – Built on blazing vector database for massive data/high concurrency.
- Evaluation Tools – Iterate quickly on retrieval and prompt strategies with built-in testing.
- Custom Business Logic – No-code tools, multi-agent flows, lead capture require custom development.

- ✅ No-Fuss Internal Search – Employees use without coding, simple deployment for teams
- ⚠️ Not Public-Facing – Not ideal for customer chatbots or developer-heavy customization
- Siloed Environment – Single AI search environment, not broad extensible platform
- Simpler Scope – Less flexible than CustomGPT, but faster setup for internal use

- Time-to-value – 2-minute deployment vs weeks with DIY
- Always current – Auto-updates to latest GPT models
- Proven scale – 6,000+ organizations, millions of queries
- Multi-LLM – OpenAI + Claude reduces vendor lock-in

No-Code Interface & Usability

- ⚠️ Developer-Centric – No no-code editor or widget; console for quick uploads/tests only.
- Code Required – Must code front-end and call Pinecone API for branded chatbot.
- No Admin UI – No role-based admin for non-tech staff; build your own if needed.
- Perfect for Dev Teams – Not plug-and-play for non-coders; requires development resources.

- ✅ Straightforward UI – Users log in, ask questions, get answers without coding
- ✅ No-Code Admin – Admins connect data sources, Pyx indexes automatically
- Minimal Customization – UI stays consistent and uncluttered by design
- Internal Q&A Hub – Perfect for employee use, not external embedding or branding

- 2-minute deployment – Fastest time-to-value in the industry
- Wizard interface – Step-by-step with visual previews
- Drag-and-drop – Upload files, paste URLs, connect cloud storage
- In-browser testing – Test before deploying to production
- Zero learning curve – Productive on day one

- Market Position – Developer-focused RAG backend on top-ranked vector database (billions of embeddings).
- Target Customers – Dev teams building custom RAG apps requiring massive scale and concurrency.
- Key Competitors – OpenAI Assistants API, Weaviate, Milvus, CustomGPT, Vectara, DIY solutions.
- ✅ Competitive Advantages – Proven infrastructure, auto chunking/embedding, OpenAI-compatible API, GPT-4/Claude choice, SOC 2.
- Best Value For – High-volume apps needing enterprise vector search without managing infrastructure.

- Market Position – Turnkey internal knowledge search (Germany), not embeddable chatbot platform
- Target Customers – Small-mid European teams needing GDPR compliance and simple deployment
- Key Competitors – Glean, Guru, Notion AI; not customer-facing chatbots like CustomGPT
- ✅ Advantages – Simple scope, auto-sync, GDPR compliance, ~$30/user/month predictable pricing
- ⚠️ Use Case Fit – Perfect for <50 user teams, not API integrations or public chatbots

- Market position – Leading RAG platform balancing enterprise accuracy with no-code usability. Trusted by 6,000+ orgs including Adobe, MIT, Dropbox.
- Key differentiators – #1 benchmarked accuracy • 1,400+ formats • Full white-labeling included • Flat-rate pricing
- vs OpenAI – 10% lower hallucination, 13% higher accuracy, 34% faster
- vs Botsonic/Chatbase – More file formats, source citations, no hidden costs
- vs LangChain – Production-ready in 2 min vs weeks of development

- ✅ GPT-4 Support – GPT-4o and GPT-4 from OpenAI for top-tier quality.
- ✅ Claude 3.5 Sonnet – Anthropic's safety-focused model available for all queries.
- ⚠️ Manual Model Selection – Explicitly choose model per request; no auto-routing based on complexity.
- Roadmap Expansion – More LLM providers coming; GPT-3.5 not in current preview.

- ⚠️ Undisclosed LLM – Likely GPT-3.5/GPT-4 but model details not publicly documented
- ⚠️ No Model Selection – Cannot switch LLMs or choose speed vs accuracy configurations
- ⚠️ Opaque Architecture – Context window size and capabilities not exposed to users
- Simplicity Focus – Hides technical complexity, users ask questions and get answers
- ⚠️ No Fine-Tuning – Cannot customize model on domain data for specialized responses

- OpenAI – GPT-5.1 (Optimal/Smart), GPT-4 series
- Anthropic – Claude 4.5 Opus/Sonnet (Enterprise)
- Auto-routing – Intelligent model selection for cost/performance
- Managed – No API keys or fine-tuning required

- ✅ Automatic Chunking – Document segmentation and vector generation automatic; no manual preprocessing.
- ✅ Pinecone Vector DB – High-speed database supporting billions of embeddings at enterprise scale.
- ✅ Metadata Filtering – Smart retrieval using tags/attributes for narrowing results at query time.
- ✅ Citations Reduce Hallucinations – Responses include source citations tying answers to real documents.
- Evaluation API – Score accuracy against gold-standard datasets for continuous quality improvement.

- Conversational RAG – Context-aware search over enterprise documents with follow-up support
- ✅ Auto-Sync – Repositories sync automatically, changes reflected almost instantly
- Document Formats – PDF, DOCX, PPT, TXT and common enterprise formats supported
- ⚠️ No Advanced Controls – Chunking, embedding models, similarity thresholds not exposed
- ⚠️ Limited Transparency – No citation metrics or anti-hallucination details published
- Closed System – Optimized for internal Q&A, limited visibility into retrieval architecture

- GPT-4 + RAG – Outperforms OpenAI in independent benchmarks
- Anti-hallucination – Responses grounded in your content only
- Automatic citations – Clickable source links in every response
- Sub-second latency – Optimized vector search and caching
- Scale to 300M words – No performance degradation at scale

- Financial & Legal – Compliance assistants, portfolio analysis, case law research, contract analysis at scale.
- Technical Support – Documentation search for resolving issues with accurate, cited technical answers.
- Enterprise Knowledge – Self-serve knowledge bases for teams searching corporate documentation internally.
- Shopping Assistants – Help customers navigate product catalogs with semantic search capabilities.
- ⚠️ NOT SUITABLE FOR – Non-technical teams wanting turnkey chatbot with UI; developer-centric only.

- ✅ Internal Knowledge Search – Employees asking questions about company documents and policies
- ✅ Team Onboarding – New hires finding information without bothering colleagues
- ✅ Policy Lookup – HR, compliance, operational procedure retrieval for staff
- ✅ Small European Teams – GDPR-compliant internal search with EU data residency
- ⚠️ NOT SUITABLE FOR – Public chatbots, customer support, API integrations, multi-channel deployment

- Customer support – 24/7 AI handling common queries with citations
- Internal knowledge – HR policies, onboarding, technical docs
- Sales enablement – Product info, lead qualification, education
- Documentation – Help centers, FAQs with auto-crawling
- E-commerce – Product recommendations, order assistance

- ✅ SOC 2 Type II – Enterprise-grade security validation from independent third-party audits.
- ✅ HIPAA Certified – Available for healthcare applications processing PHI with appropriate agreements.
- Data Encryption – Files encrypted and siloed; never used to train global models.
- Enterprise Features – Optional dedicated VPC, SSO, advanced roles, custom hosting for compliance.

- ✅ GDPR Compliance – Germany-based with implicit EU data protection compliance
- ✅ German Data Residency – EU storage location for regional data sovereignty requirements
- ✅ Enterprise Privacy – Customer data isolated, encrypted in transit and at rest
- ✅ Role-Based Access – Built-in controls, admins set document visibility per user
- ⚠️ Limited Certifications – SOC 2, ISO 27001, HIPAA not publicly documented

- SOC 2 Type II + GDPR – Regular third-party audits, full EU compliance
- 256-bit AES encryption – Data at rest; SSL/TLS in transit
- SSO + 2FA + RBAC – Enterprise access controls with role-based permissions
- Data isolation – Never trains on customer data
- Domain allowlisting – Restrict chatbot to approved domains

- Free Starter Tier – 1GB storage, 200K output tokens, 1.5M input tokens for evaluation/development.
- Standard Plan – $50/month minimum, which includes usage credits; pay-as-you-go beyond that.
- Token & Storage Costs – ~$8/M input, ~$15/M output tokens, ~$3/GB-month storage, $0.20/day per assistant.
- ✅ Linear Scaling – Costs scale with usage; Enterprise adds volume discounts and multi-region.

- Seat-Based Pricing – ~$30 per user per month
- ✅ Small Team Value – Affordable for teams under 50 users, predictable costs
- ⚠️ Scalability Cost – 100 users = $3,000/month, expensive for large organizations
- Unlimited Content – No published document limits, gated only by user seats
- Free Trial + Enterprise – Evaluation available, custom pricing for volume discounts

- Standard: $99/mo – 10 chatbots, 60M words, 5K items/bot
- Premium: $449/mo – 100 chatbots, 300M words, 20K items/bot
- Enterprise: Custom – SSO, dedicated support, custom SLAs
- 7-day free trial – Full Standard access, no charges
- Flat-rate pricing – No per-query charges, no hidden costs
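
The seat-based and flat-rate figures quoted above can be compared with simple arithmetic. The sketch below is only that: it uses the ~$30 per-seat price and the $99/$449 plan prices from the lists, and ignores plan limits (bots, words, items) and the fact that the products target different use cases. The function names are made up for illustration.

```python
SEAT_PRICE_PER_USER = 30    # approximate per-user monthly price quoted above
FLAT_STANDARD = 99          # flat monthly price, Standard plan
FLAT_PREMIUM = 449          # flat monthly price, Premium plan


def seat_based_cost(users: int) -> int:
    """Monthly cost when every user needs a paid seat."""
    return users * SEAT_PRICE_PER_USER


def break_even_users(flat_price: int) -> int:
    """Smallest team size at which seat-based pricing exceeds a flat plan."""
    return flat_price // SEAT_PRICE_PER_USER + 1


cost_100_users = seat_based_cost(100)           # 3000, matching the figure above
vs_standard = break_even_users(FLAT_STANDARD)   # 4 users: 120 > 99
vs_premium = break_even_users(FLAT_PREMIUM)     # 15 users: 450 > 449
```

Seen this way, per-seat pricing stays cheaper only for very small teams, while a flat plan's cost is driven by content volume rather than headcount.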

- ✅ Comprehensive Docs – docs.pinecone.io with guides, API reference, and copy-paste RAG examples.
- Developer Community – Forums, Slack/Discord channels, and Stack Overflow tags for peer support.
- Python & Node SDKs – Feature-rich libraries with clean REST API fallback option.
- Enterprise Support – Email/priority support for paid tiers with custom SLAs for Enterprise.

- ✅ Direct Support – Email, phone, chat with hands-on onboarding approach
- ✅ Quick Deployment – Minimal admin overhead, connect sources and start asking questions
- ⚠️ No Open Community – Closed solution, no plug-ins or user extensions
- ⚠️ No Developer Docs – No API documentation or programmatic access guides
- Internal Roadmap – Updates from Pyx only, no user-contributed features

- Documentation hub – Docs, tutorials, API references
- Support channels – Email, in-app chat, dedicated managers (Premium+)
- Open-source – Python SDK, Postman, GitHub examples
- Community – User community + 5,000 Zapier integrations

Limitations & Considerations

- ⚠️ Developer-Centric – No no-code editor or chat widget; requires coding for UI.
- ⚠️ Stateless Architecture – Long-term memory, multi-agent flows, and conversation state must live in app code.
- ⚠️ Limited Models – GPT-4 and Claude 3.5 only; GPT-3.5 not in preview.
- File Restrictions – Scanned PDFs and OCR not supported; images in documents ignored.
- ⚠️ No Business Features – No lead capture, handoff, or chat logs; pure RAG backend.

- ⚠️ No Public API – Cannot embed or call programmatically, standalone UI only
- ⚠️ No Messaging Integrations – No Slack, Teams, WhatsApp or chat platform connectors
- ⚠️ Limited Branding – Minimal customization, not white-label solution for public deployment
- ⚠️ No Advanced Controls – Cannot configure RAG parameters, model selection, retrieval strategies
- ⚠️ Seat-Based Scaling – Expensive for large orgs vs usage-based pricing models
- ✅ Best For – Small European teams (<50 users) prioritizing simplicity and GDPR over flexibility

- Managed service – Less control over RAG pipeline vs build-your-own
- Model selection – OpenAI + Anthropic only; no Cohere, AI21, open-source
- Real-time data – Requires re-indexing; not ideal for live inventory/prices
- Enterprise features – Custom SSO only on Enterprise plan

- ✅ Context API – Delivers structured context with relevancy scores for agentic systems requiring verification.
- ✅ MCP Server Integration – Every Assistant is an MCP server; connect it as a context tool (available since Nov 2024).
- Custom Instructions – Metadata filters restrict vector search; instructions tailor responses with directives.
- Retrieval-Only Mode – Use purely for context retrieval; agents gather info, then process it with their own logic.
- ⚠️ Agent Limitations – Stateless design; orchestration logic and multi-agent coordination live in the application layer.

- ⚠️ NO Agent Capabilities – No autonomous agents, tool calling, or multi-agent orchestration
- Conversational Search Only – Context-aware dialogue for Q&A, not agentic or autonomous behavior
- Basic RAG Architecture – Standard retrieval without function calling, tool use, or workflows
- ⚠️ No External Actions – Cannot invoke APIs, execute code, query databases, or interact externally
- Internal Knowledge Focus – Employee Q&A about documents, not task automation or workflows

- Custom AI Agents – Autonomous GPT-4/Claude agents for business tasks
- Multi-Agent Systems – Specialized agents for support, sales, knowledge
- Memory & Context – Persistent conversation history across sessions
- Tool Integration – Webhooks + 5,000 Zapier apps for automation
- Continuous Learning – Auto re-indexing without manual retraining

RAG-as-a-Service Assessment

- ✅ TRUE RAG-AS-A-SERVICE – Managed backend API abstracting chunking, embedding, storage, retrieval, reranking, generation.
- API-First Service – Pure backend with Python/Node SDKs; developers build custom front-ends on top.
- ✅ Pinecone Vector DB Foundation – Built on proven database supporting billions of embeddings at enterprise scale.
- OpenAI-Compatible – Simplifies migration from OpenAI Assistants to Pinecone Assistant seamlessly.
- ⚠️ Key Difference – No no-code UI/widgets vs full-stack platforms (CustomGPT) with embeddable chat.

- ⚠️ NOT TRUE RAG-AS-A-SERVICE – Standalone internal app, not API-accessible RAG platform
- Turnkey Application – Self-contained Q&A tool vs developer-accessible RAG infrastructure
- ⚠️ No API Access – No REST API, SDKs, programmatic access unlike CustomGPT/Vectara
- Closed Application – Web/desktop interface only, cannot build custom applications on top
- SaaS vs RaaS – Software-as-a-Service (standalone app) NOT Retrieval-as-a-Service (API infrastructure)
- Best Comparison Category – Internal search tools (Glean, Guru), not developer RAG platforms

- Platform type – TRUE RAG-AS-A-SERVICE with managed infrastructure
- API-first – REST API, Python SDK, OpenAI compatibility, MCP Server
- No-code option – 2-minute wizard deployment for non-developers
- Hybrid positioning – Serves both dev teams (APIs) and business users (no-code)
- Enterprise ready – SOC 2 Type II, GDPR, WCAG 2.0, flat-rate pricing
