Data Ingestion & Knowledge Sources

✅ Enterprise Integrations – APIs connect to Snowflake, Databricks, Salesforce, data lakes
✅ High Volume Processing – Async APIs handle millions/billions of records efficiently
PII/PHI Scanning – Detects sensitive data across structured and unstructured sources
⚠️ No File Uploads – Designed for data pipelines, not document upload workflows

✅ Ready-Made Connectors – Google Drive, Gmail, Notion, and Confluence sync data automatically
✅ Multi-Format Upload – PDF, DOCX, TXT, Markdown, URL/sitemap crawling supported
✅ Automatic Retraining – Manual or automatic knowledge base updates keep RAG current
✅ Real-Time Indexing – Launch RAG pipelines with immediate content updates and synchronization

1,400+ file formats – PDF, DOCX, Excel, PowerPoint, Markdown, HTML + auto-extraction from ZIP/RAR/7Z archives
Website crawling – Sitemap indexing with configurable depth for help docs, FAQs, and public content
Multimedia transcription – AI Vision, OCR, YouTube/Vimeo/podcast speech-to-text built-in
Cloud integrations – Google Drive, SharePoint, OneDrive, Dropbox, Notion with auto-sync
Knowledge platforms – Zendesk, Freshdesk, HubSpot, Confluence, Shopify connectors
Massive scale – 60M words (Standard) / 300M words (Premium) per bot with no performance degradation

Security Middleware – API layer sanitizes data before reaching any LLM
✅ Data Pipeline Integration – Works with Snowflake, Kafka, Databricks for AI workflows
⚠️ No Chat Widgets – Backend security layer, not end-user interface platform

✅ Multi-Channel – Slack, Telegram, WhatsApp, Facebook Messenger, Microsoft Teams, chat widget
✅ Webhooks & Zapier – External actions: tickets, CRM updates, workflow automation
✅ Support Workflows – Real-time chat, easy escalation, customer-support focused design
⚠️ No Native UI – RAG API platform requires custom chat interface development

Website embedding – Lightweight JS widget or iframe with customizable positioning
CMS plugins – WordPress, Wix, Webflow, Framer, Squarespace native support
5,000+ app ecosystem – Zapier connects CRMs, marketing, e-commerce tools
MCP Server – Integrate with Claude Desktop, Cursor, ChatGPT, Windsurf
OpenAI SDK compatible – Drop-in replacement for OpenAI API endpoints
LiveChat + Slack – Native chat widgets with human handoff capabilities

⚠️ Not a Chatbot – Detects and masks sensitive data, doesn't generate responses
✅ Advanced NER + Regex – Spots PII/PHI while preserving context and accuracy
Content Moderation – Safety checks ensure compliance and prevent data exposure
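
To make the "NER + Regex" item above more concrete, here is a minimal sketch of the regex half of such a detector. It is an illustration only and not any vendor's implementation: the `PATTERNS` table and the `mask_text` helper are hypothetical names, the patterns cover only simple US-style formats, and a real system would add an NER model to catch names and other free-text entities.

```python
import re

# Hypothetical patterns for illustration; real detectors use far more robust rules.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "PHONE": re.compile(r"\b\d{3}[-.]\d{3}[-.]\d{4}\b"),
}


def mask_text(text: str) -> str:
    """Replace each match with a typed placeholder so the sentence keeps its shape."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"<{label}>", text)
    return text


sample = "Contact Jane at jane.doe@example.com or 555-123-4567; SSN 123-45-6789."
masked = mask_text(sample)
print(masked)
```

Typed placeholders such as `<EMAIL>` keep the sentence structure readable for a downstream LLM, which is the general idea behind context-preserving masking. The name "Jane" is left untouched here because regex alone cannot reliably detect names.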

✅ RAG Architecture – Context-aware answers from your data only, reduces hallucinations significantly
✅ Multi-Turn Context – Full session history, 95+ languages out of the box
✅ Lead Capture – Automatic lead capture with human escalation on demand
✅ Fallback Handling – Human handoff and fallback messages when bot confidence is low

✅ #1 accuracy – Median 5/5 in independent benchmarks, 10% lower hallucination than OpenAI
✅ Source citations – Every response includes clickable links to original documents
✅ 93% resolution rate – Handles queries autonomously, reducing human workload
✅ 92 languages – Native multilingual support without per-language config
✅ Lead capture – Built-in email collection, custom forms, real-time notifications
✅ Human handoff – Escalation with full conversation context preserved

⚠️ No Visual Branding – Backend middleware, no UI to customize or brand
✅ Policy Customization – Tailor masking rules via dashboard or config files
Compliance-Focused – Configure policies to match GDPR, HIPAA, PCI DSS requirements

✅ Widget Customization – Logos, colors, welcome text, icons match brand perfectly
✅ White-Label – Remove Ragie branding entirely for clean deployment
✅ Domain Allowlisting – Lock bot to approved sites for security
⚠️ Moderate Customization – Not as extensive as fully white-labeled custom solutions

Full white-labeling included – Colors, logos, CSS, custom domains at no extra cost
2-minute setup – No-code wizard with drag-and-drop interface
Persona customization – Control AI personality, tone, response style via pre-prompts
Visual theme editor – Real-time preview of branding changes
Domain allowlisting – Restrict embedding to approved sites only

✅ Model-Agnostic – Works with any LLM: GPT, Claude, LLaMA, Gemini, custom models
✅ LangChain Integration – Orchestrates multi-model workflows and complex AI pipelines
✅ Context-Preserving – Maintains 99% accuracy (RARI) despite masking sensitive data

✅ OpenAI GPT-4o – Primary "accurate" mode for depth, advanced reasoning, quality
✅ GPT-4o-mini – "Fast" mode balances quality with speed for volume
✅ Claude 3.5 Sonnet – Confirmed support through RAG-as-a-Service architecture integration
✅ Mode Toggle – Switch fast/accurate modes per chatbot without code changes
⚠️ No Model Agnosticism – OpenAI/Claude only; no Llama, Mistral, custom deployment

GPT-5.1 models – Latest thinking models (Optimal & Smart variants)
GPT-4 series – GPT-4, GPT-4 Turbo, GPT-4o available
Claude 4.5 – Anthropic's Opus available for Enterprise
Auto model routing – Balances cost/performance automatically
Zero API key management – All models managed behind the scenes

Developer Experience (API & SDKs)

✅ REST APIs + Python SDK – Straightforward scanning, masking, and tokenizing implementation
Detailed Documentation – Step-by-step guides for data pipelines and AI apps
Real-Time + Batch – Supports ETL, CI/CD pipelines with comprehensive examples

✅ REST API – Complete coverage: bot management, data ingestion, answers, clear docs
✅ TypeScript/Python SDKs – Official SDKs for production-grade RAG development workflows
✅ No-Code Builder – Drag-and-drop dashboard for non-devs, API for heavy lifting
✅ SourceSync API – Headless RAG layer for fully customizable retrieval backends

REST API – Full-featured for agents, projects, data ingestion, chat queries
Python SDK – Open-source customgpt-client with full API coverage
Postman collections – Pre-built requests for rapid prototyping
Webhooks – Real-time event notifications for conversations and leads
OpenAI compatible – Use existing OpenAI SDK code with minimal changes

✅ 99% RARI Accuracy – Context-preserving masking vs 70% vanilla masking accuracy
✅ Low Latency – Async APIs and auto-scaling maintain performance at high volume
Semantic Preservation – Masked data retains context for accurate LLM responses

✅ Hybrid Search – Re-ranking, smart partitioning, semantic + keyword retrieval
✅ Fast/Accurate Modes – Speed-optimized or depth-focused responses per configuration
✅ Citation Support – Answers grounded in sources with traceable references
✅ Entity Extraction – Structured data from unstructured documents for advanced querying

Sub-second responses – Optimized RAG with vector search and multi-layer caching
Benchmark-proven – 13% higher accuracy, 34% faster than OpenAI Assistants API
Anti-hallucination tech – Responses grounded only in your provided content
OpenGraph citations – Rich visual cards with titles, descriptions, images
99.9% uptime – Auto-scaling infrastructure handles traffic spikes

Customization & Flexibility (Behavior & Knowledge)

✅ Custom Regex Rules – Fine-tune masking with granular entity types and patterns
✅ Role-Based Access – Privileged users see unmasked data, others see tokens
Dynamic Policies – Update masking rules without model retraining for new regulations
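
The role-based access item above (privileged users see unmasked data, others see tokens) can be sketched with a small in-memory token vault. This is a hedged illustration under stated assumptions, not the product's design: `TokenVault`, `PRIVILEGED_ROLES`, and the role names are all hypothetical, and a real deployment would use a persistent, access-controlled store with audit logging.

```python
import secrets

# Hypothetical role names; an actual policy would come from the organisation's IAM setup.
PRIVILEGED_ROLES = {"compliance_officer", "admin"}


class TokenVault:
    """Maps opaque tokens to original values and reveals them only to privileged roles."""

    def __init__(self) -> None:
        self._store: dict[str, str] = {}

    def tokenize(self, value: str) -> str:
        # A random token carries no information about the original value.
        token = f"TOK_{secrets.token_hex(4)}"
        self._store[token] = value
        return token

    def reveal(self, token: str, role: str) -> str:
        # Non-privileged callers (and unknown tokens) get the token back unchanged.
        if role in PRIVILEGED_ROLES:
            return self._store.get(token, token)
        return token


vault = TokenVault()
ssn_token = vault.tokenize("123-45-6789")
print(vault.reveal(ssn_token, "analyst"))             # prints the opaque token
print(vault.reveal(ssn_token, "compliance_officer"))  # prints the original value
```

Because the check happens at read time, changing who counts as privileged only requires updating the role set, which fits the "update rules without retraining" idea in the same list.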

✅ KB Updates – Hit "retrain," recrawl, upload files anytime in dashboard
✅ Personas & Prompts – Set tone, style, quick prompts for behavior
✅ Multiple Bots – Spin up bots per team/domain under one account
✅ Functions Feature – Perform actions (tickets, CRM) directly in chat

Live content updates – Add/remove content with automatic re-indexing
System prompts – Shape agent behavior and voice through instructions
Multi-agent support – Different bots for different teams
Smart defaults – No ML expertise required for custom behavior

Enterprise Pricing – Custom quotes based on data volume and throughput
✅ Massive Scale – Handles millions/billions of records, cloud or on-prem deployment
Volume Discounts – Free trial available, pricing optimized for large organizations

✅ Growth Plan – ~$79/month for small teams, basic multi-channel support
✅ Pro/Scale Plan – ~$259/month with expanded capacity, messages, bots, crawls
✅ Enterprise Plan – Custom pricing for large deployments, dedicated support, SLAs
✅ Smooth Scaling – Message credits scale costs with usage, no linear explosions
✅ 7-Day Free Trial – Full feature access to test everything risk-free

Standard: $99/mo – 60M words, 10 bots
Premium: $449/mo – 300M words, 100 bots
Auto-scaling – Managed cloud scales with demand
Flat rates – No per-query charges

✅ Privacy-First – Masks PII/PHI before LLM access, meets GDPR/HIPAA/PCI DSS
✅ End-to-End Encryption – TLS in transit, encryption at rest with audit logs
✅ Deployment Flexibility – Public cloud, private cloud, or on-prem for data residency

✅ HTTPS/TLS & Encryption – Industry standard in-transit, data-at-rest encryption protection
✅ Workspace Isolation – Customer data stays isolated, no cross-tenant leakage
✅ SOC 2/GDPR/HIPAA – Type II certified, GDPR/HIPAA/CASA/CCPA compliant infrastructure
✅ Access Controls – Dashboard permissions, API key management, audit logging
⚠️ Cloud-Only SaaS – No on-premise/air-gapped deployment options for regulated industries

SOC 2 Type II + GDPR – Third-party audited compliance
Encryption – 256-bit AES at rest, SSL/TLS in transit
Access controls – RBAC, 2FA, SSO, domain allowlisting
Data isolation – Never trains on your data

Observability & Monitoring

Comprehensive Audit Logs – Tracks every masking action and sensitive data detection
✅ SIEM Integration – Real-time compliance and performance monitoring with alerting
RARI Metrics – Reports accuracy preservation and data protection effectiveness

✅ Dashboard Metrics – Chat histories, sentiment, key performance indicators displayed
✅ Daily Digests – Email summaries keep team informed without logins
⚠️ Basic Analytics – Not as comprehensive as dedicated conversation analytics platforms

Real-time dashboard – Query volumes, token usage, response times
Customer Intelligence – User behavior patterns, popular queries, knowledge gaps
Conversation analytics – Full transcripts, resolution rates, common questions
Export capabilities – API export to BI tools and data warehouses

✅ Enterprise Support – Dedicated account managers and SLA-backed assistance
Rich Documentation – API guides, whitepapers, and secure AI pipeline best practices
Industry Partnerships – Active thought leadership and compliance standards collaboration

✅ Email Support – 24-48hr response; faster for Enterprise customers
✅ Submit Request Form – Feature requests, integration suggestions, custom needs
✅ Partner Program – Agency partnerships for consultants, resellers, ecosystem growth
✅ Live Demo – Interactive environment for evaluating platform before trial
⚠️ No Phone Support – Email-based on standard plans; phone likely Enterprise-only

Comprehensive docs – Tutorials, cookbooks, API references
Email + in-app support – Under 24hr response time
Premium support – Dedicated account managers for Premium/Enterprise
Open-source SDK – Python SDK, Postman, GitHub examples
5,000+ Zapier apps – CRMs, e-commerce, marketing integrations

Additional Considerations

✅ Secure RAG Focus – Protects sensitive data in third-party LLMs while preserving context
✅ On-Prem Deployment – Total isolation for highly regulated sectors
Proprietary RARI Metric – Proves aggressive masking maintains 99% model accuracy

✅ Functions Feature – Bot performs real actions (tickets, CRM) in chat
✅ Headless API – SourceSync gives devs fully customizable retrieval layer
✅ Free Developer Tier – Test production-grade RAG infrastructure without commitment
⚠️ Functions Complexity – Advanced workflows require technical setup, not fully no-code

Time-to-value – 2-minute deployment vs weeks with DIY
Always current – Auto-updates to latest GPT models
Proven scale – 6,000+ organizations, millions of queries
Multi-LLM – OpenAI + Claude reduces vendor lock-in

No-Code Interface & Usability

⚠️ No Chatbot Builder – Technical dashboard for policy setup, not end-user interface
IT/Security Focus – Config panels for technical teams, not wizard-style tools
✅ Guided Presets – HIPAA Mode, GDPR Mode for rapid compliance onboarding

✅ Guided Dashboard – Paste a URL or upload files to get up and running fast
✅ Pre-Built Templates – Live demo, simple embed snippet for painless deployment
✅ In-Platform Guidance – Visual walkthrough of configuration and deployment for no-code users
✅ Knowledge Base – Self-service docs covering setup, integrations, troubleshooting guides

2-minute deployment – Fastest time-to-value in the industry
Wizard interface – Step-by-step with visual previews
Drag-and-drop – Upload files, paste URLs, connect cloud storage
In-browser testing – Test before deploying to production
Zero learning curve – Productive on day one

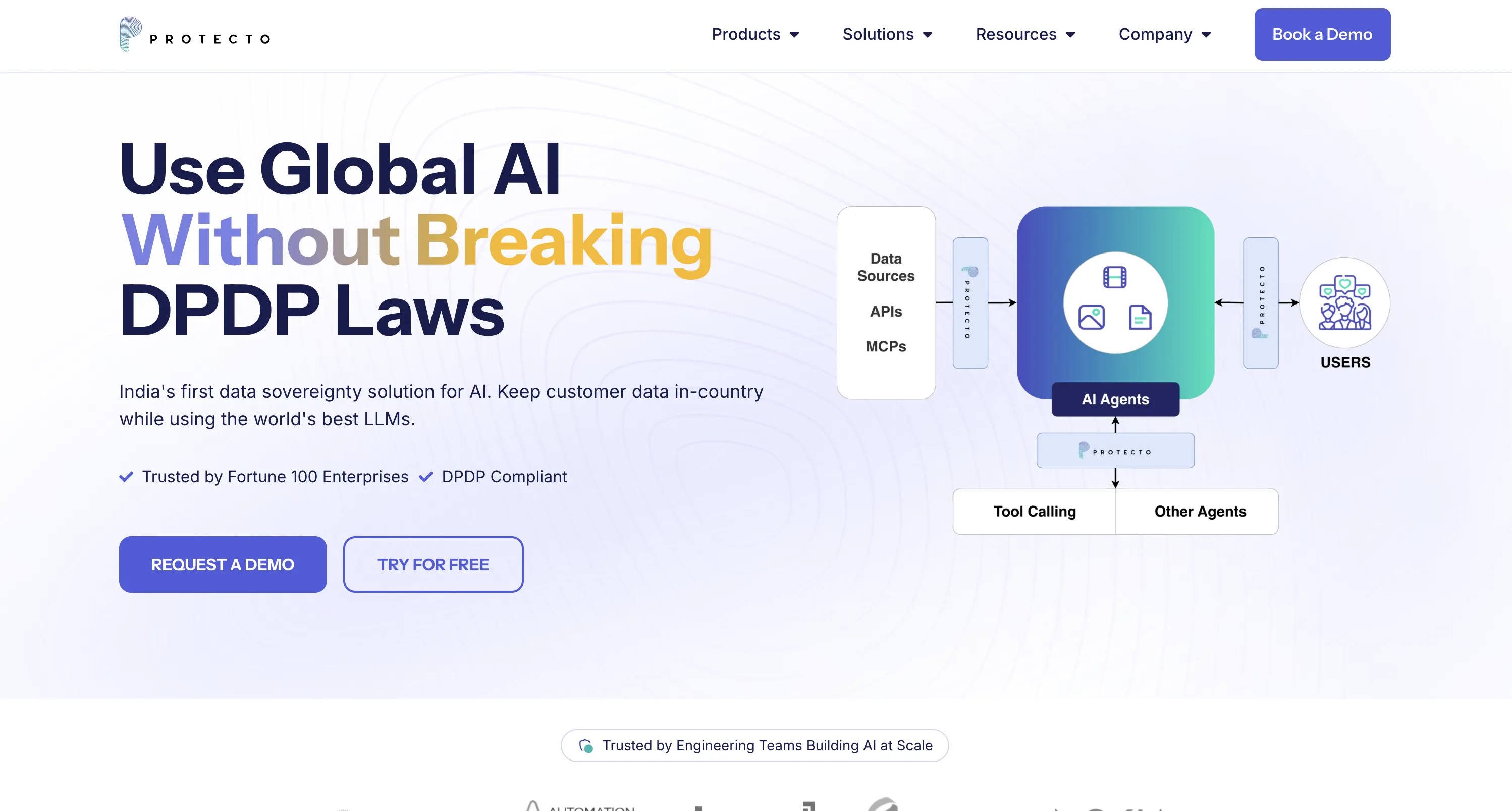

Market position: Enterprise data security middleware for AI, not RAG platform
Target customers: Healthcare, finance, government needing GDPR/HIPAA/PCI compliance and on-prem deployment
Key competitors: Presidio (Microsoft), Private AI, Nightfall AI, traditional DLP tools
✅ Competitive advantages: 99% RARI vs 70% vanilla, handles billions of records
Pricing advantage: Higher cost but prevents regulatory fines (GDPR €20M, HIPAA $1.5M)
Use case fit: Critical for healthcare PII/PHI, financial records, government data compliance

✅ Market Position – Developer-friendly RAG balancing no-code dashboard with API flexibility
✅ Target Customers – SMBs needing quick chatbot, multi-channel teams, devs wanting flexibility
✅ Key Competitors – Chatbase.co, Botsonic, SiteGPT, CustomGPT, SMB no-code chatbot platforms
✅ Competitive Advantages – Hybrid search, SourceSync API, Functions, 95+ languages, ready connectors
✅ Pricing Advantage – Mid-range $79-$259/month, straightforward tiers, smooth scaling, best value
✅ Use Case Fit – Multi-channel support, simple REST API, webhook/Zapier CRM/ticket integration

Market position – Leading RAG platform balancing enterprise accuracy with no-code usability. Trusted by 6,000+ orgs including Adobe, MIT, Dropbox.
Key differentiators – #1 benchmarked accuracy • 1,400+ formats • Full white-labeling included • Flat-rate pricing
vs OpenAI – 10% lower hallucination, 13% higher accuracy, 34% faster
vs Botsonic/Chatbase – More file formats, source citations, no hidden costs
vs LangChain – Production-ready in 2 min vs weeks of development

✅ Model-Agnostic: Works with GPT-4, Claude, LLaMA, Gemini, custom models
Pre-Processing Layer: Masks data before LLM access, not tied to providers
✅ LangChain Integration: Orchestrates multi-model workflows and complex AI pipelines
✅ Context-Preserving: 99% RARI vs 70% vanilla masking accuracy
No Lock-In: Switch LLM providers without changing Protecto configuration

✅ OpenAI GPT-4o – "Accurate" mode for depth, comprehensive analysis, highest quality
✅ GPT-4o-mini – "Fast" mode balances quality with rapid response times
✅ Claude 3.5 Sonnet – Anthropic integration enables Claude model deployment in production
✅ 2024 Models – Updated for latest including gpt-4o-mini long-context improvements
⚠️ Limited Selection – Only GPT-4o/mini toggle; no multi-model routing by complexity

OpenAI – GPT-5.1 (Optimal/Smart), GPT-4 series
Anthropic – Claude 4.5 Opus/Sonnet (Enterprise)
Auto-routing – Intelligent model selection for cost/performance
Managed – No API keys or fine-tuning required

⚠️ NOT A RAG PLATFORM: Security middleware only, not retrieval-augmented generation platform
RAG Protection Layer: Masks PII/PHI before RAG indexing and vector database storage
✅ Real-Time Sanitization: Intercepts data to/from RAG systems preventing sensitive data leakage
✅ Context Preservation: Maintains semantic meaning for accurate RAG retrieval despite masking
Query + Response Security: Masks sensitive data in queries and post-processes responses
Integration Point: Security middleware between data sources and RAG platforms

✅ Hybrid Search – Semantic vector + keyword retrieval for comprehensive document matching
✅ Re-Ranking Engine – Surfaces most relevant content from retrieved docs
✅ Smart Partitioning – Intelligent chunking for optimized retrieval across large KBs
✅ Citation Support – Answers grounded in sources with traceable transparency
✅ 95+ Languages – Multilingual RAG without separate configurations for global bases
⚠️ Retraining Workflow – Manual retraining unless automatic mode enabled, not real-time
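
As a rough picture of how hybrid search can merge a keyword result list with a semantic (vector) result list, the sketch below uses reciprocal rank fusion (RRF). The source does not say which fusion or re-ranking method the platform uses, so RRF is an assumption chosen for its simplicity; the function name, the document IDs, and the constant `k=60` (a commonly used default) are illustrative.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Fuse several ranked lists: each document scores sum(1 / (k + rank))."""
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Highest fused score first; ties broken alphabetically for determinism.
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))


# Hypothetical outputs of a keyword index and a vector index for the same query.
keyword_hits = ["doc_a", "doc_b", "doc_c"]
semantic_hits = ["doc_b", "doc_d", "doc_a"]

fused = reciprocal_rank_fusion([keyword_hits, semantic_hits])
print(fused)
```

Documents that appear in both lists (`doc_a`, `doc_b`) rise above those found by only one retriever, which is the practical benefit of combining semantic and keyword retrieval before any further re-ranking step.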

GPT-4 + RAG – Outperforms OpenAI in independent benchmarks
Anti-hallucination – Responses grounded in your content only
Automatic citations – Clickable source links in every response
Sub-second latency – Optimized vector search and caching
Scale to 300M words – No performance degradation at scale

Healthcare AI: HIPAA-compliant patient analysis, clinical support, PHI masking in medical records
Financial Services: PCI DSS payment data compliance, financial records, customer service chatbots
Government & Defense: Classified data protection, citizen privacy, strict data residency requirements
Customer Support: Secure analysis of tickets, emails, transcripts with PII for AI insights
Multi-Agent Workflows: Role-based data access across AI agents for global enterprises
Claims Processing: Insurance PHI protection for accurate, privacy-preserving RAG workflows

✅ Customer Support – Self-service bots from help articles, reduce tickets up to 70%
✅ Internal Assistants – Employee-facing AI with Google Drive, Notion, Confluence knowledge
✅ Multi-Channel Support – Unified deployment: Slack, Telegram, WhatsApp, Messenger, Teams
✅ Website Widgets – Real-time engagement, lead capture, instant question answering
✅ CRM Integration – Functions create tickets, update CRM, trigger workflows from chat

Customer support – 24/7 AI handling common queries with citations
Internal knowledge – HR policies, onboarding, technical docs
Sales enablement – Product info, lead qualification, education
Documentation – Help centers, FAQs with auto-crawling
E-commerce – Product recommendations, order assistance

✅ GDPR/HIPAA/PCI DSS: Pre-configured policies, BAA support, Safe Harbor PHI masking
PDPL/DPDP Compliance: Saudi Arabia PDPL, India DPDP with regional policies
✅ End-to-End Encryption: TLS in transit, encryption at rest with audit logs
✅ Role-Based Access: Privileged users see unmasked data, others see tokens
✅ Deployment Flexibility: SaaS, VPC, on-prem for strict data residency
Zero Data Egress: On-prem ensures data never leaves organizational boundaries

✅ AES-256 & TLS – Encryption at rest and in transit, zero training use
✅ SOC 2 Type II – Certified for GDPR, HIPAA, CASA, CCPA compliance
✅ Domain Allowlisting – Lock chatbots to approved domains for security
✅ Audit Logging – Activity tracking for compliance monitoring, incident investigation
⚠️ Cloud-Only – No on-premise for air-gapped/highly regulated requirements

SOC 2 Type II + GDPR – Regular third-party audits, full EU compliance
256-bit AES encryption – Data at rest; SSL/TLS in transit
SSO + 2FA + RBAC – Enterprise access controls with role-based permissions
Data isolation – Never trains on customer data
Domain allowlisting – Restrict chatbot to approved domains

Enterprise Pricing: Custom quotes based on volume, throughput, deployment model
✅ Free Trial: Test platform capabilities before commitment with hands-on evaluation
Volume Discounts: Pricing scales with usage, better rates for higher volumes
Cost Justification: Prevents regulatory fines (GDPR €20M, HIPAA $1.5M penalties)
⚠️ No Public Pricing: Contact sales for custom quotes tailored to needs

✅ Free Trial – 7 days full access, test everything risk-free
✅ Growth – ~$79/month for small teams starting chatbot deployment
✅ Pro/Scale – ~$259/month expanded capacity: messages, bots, crawls, uploads
✅ Enterprise – Custom pricing for large deployments, dedicated support, SLAs
✅ Transparent Pricing – Straightforward tiers without hidden fees or confusing per-feature charges

Standard: $99/mo – 10 chatbots, 60M words, 5K items/bot
Premium: $449/mo – 100 chatbots, 300M words, 20K items/bot
Enterprise: Custom – SSO, dedicated support, custom SLAs
7-day free trial – Full Standard access, no charges
Flat-rate pricing – No per-query charges, no hidden costs
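
For readers comparing the two flat-rate tiers just listed, a quick calculation of unit costs may help. The sketch below uses only the prices and limits stated above; the `unit_costs` helper is a hypothetical name, and the result says nothing about actual usage, since few accounts fill their word or chatbot quota.

```python
def unit_costs(price_usd: float, words_millions: float, chatbots: int) -> tuple[float, float]:
    """Return (USD per million words of capacity, USD per chatbot) for a monthly plan."""
    return round(price_usd / words_millions, 2), round(price_usd / chatbots, 2)


# Figures taken from the Standard and Premium tiers listed above.
standard = unit_costs(99, 60, 10)
premium = unit_costs(449, 300, 100)

print("Standard:", standard)
print("Premium:", premium)
```

At full quota, Standard works out to about $1.65 per million words and $9.90 per chatbot, while Premium comes to about $1.50 per million words and $4.49 per chatbot, so the higher tier mainly lowers the per-chatbot cost.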

✅ Enterprise Support: Dedicated account managers, SLA-backed assistance for large deployments
Comprehensive Docs: REST API, Python SDK, integration guides for data pipelines
Whitepapers & Best Practices: Security frameworks, compliance guides, AI pipeline architectures
Integration Guides: Snowflake, Databricks, Kafka, LangChain, CrewAI, model gateways
Professional Services: Implementation help, custom policy setup, security workflow design
✅ Training Resources: HIPAA Mode, GDPR Mode presets for rapid deployment

✅ Email Support – 24-48hr standard response; faster for Enterprise tier
✅ REST API Docs – Clear documentation with live examples covering all endpoints
✅ Daily Digests – Automated performance summaries, conversation metrics without logins
✅ Partner Program – Agency partnerships for consultants, implementers, resellers ecosystem
⚠️ No Phone Support – Email-based only on standard plans; phone Enterprise-reserved

Documentation hub – Docs, tutorials, API references
Support channels – Email, in-app chat, dedicated managers (Premium+)
Open-source – Python SDK, Postman, GitHub examples
Community – User community + 5,000 Zapier integrations

Limitations & Considerations

⚠️ NOT A RAG PLATFORM: Requires separate RAG/LLM infrastructure for complete solution
⚠️ NO Chat UI: Technical dashboard only, not end-user chatbot interface
⚠️ Developer Integration Required: APIs/SDKs need coding expertise for pipeline integration
Higher Cost: Enterprise pricing but prevents GDPR €20M, HIPAA $1.5M fines
Performance Overhead: Real-time masking adds sub-second latency in high-throughput systems
Best For: Regulated industries (healthcare, finance, government) requiring compliance, not general-purpose

⚠️ OpenAI/Claude Only – Cannot deploy Llama, Mistral, custom open-source models
⚠️ Cloud-Only – No self-hosting, on-premise, or air-gapped deployment for regulated industries
⚠️ Message Credit Caps – High-volume requires plan upgrades or Enterprise pricing
⚠️ Crawler Limits – URL/sitemap scope limited by plan tier; large sites need higher tiers
⚠️ Emerging Platform – Newer vs established competitors, smaller integration ecosystem

Managed service – Less control over RAG pipeline vs build-your-own
Model selection – OpenAI + Anthropic only; no Cohere, AI21, open-source
Real-time data – Requires re-indexing; not ideal for live inventory/prices
Enterprise features – Custom SSO only on Enterprise plan

✅ Multi-Agent Access Control: Fine-grained identity-based access enforcement across agentic workflows
✅ Role-Based Security: Controls who sees what at inference time with role-specific permissions
LangChain/CrewAI Integration: Comprehensive agentic workflow protection with major orchestration frameworks
Agent Context Sanitization: Masks PII/PHI in prompts, context, and responses during multi-step reasoning
SecRAG for Agents: RBAC integrated into retrieval, checks authorization before agent access
⚠️ NOT Agent Orchestration: Secures workflows but requires LangChain/CrewAI for coordination

✅ Agentic Retrieval – Multi-step engine: decomposes queries, self-checks, compiles cited answers
✅ MCP Server – Context-Aware descriptions enable accurate agent tool routing decisions
✅ Multi-Step Reasoning – Sequential retrieval operations with self-validation for complex queries
✅ Summary Index – Avoid document affinity problems through intelligent summarization
⚠️ No Built-In UI – API platform requires custom chat interfaces, not turnkey

Custom AI Agents – Autonomous GPT-4/Claude agents for business tasks
Multi-Agent Systems – Specialized agents for support, sales, knowledge
Memory & Context – Persistent conversation history across sessions
Tool Integration – Webhooks + 5,000 Zapier apps for automation
Continuous Learning – Auto re-indexing without manual retraining

RAG-as-a-Service Assessment

⚠️ NOT RAG-AS-A-SERVICE: Data security middleware, not retrieval-augmented generation platform
Security Middleware: Sits between data sources and RAG platforms as protection layer
RAG Protection: Sanitizes documents before indexing, queries before retrieval, responses before delivery
✅ Context-Preserving RAG: 99% RARI vs 70% vanilla masking for accurate retrieval
Stack Position: Protecto (security) + CustomGPT/Vectara (RAG) + OpenAI (LLM) = complete solution
Best Comparison: Compare to Presidio, Private AI, Nightfall AI, not RAG platforms

✅ Platform Type – TRUE RAG-AS-A-SERVICE API platform, August 2024, $5.5M seed
✅ Core Mission – Developers build AI apps connected to data, outstanding RAG results
✅ API-First Architecture – TypeScript/Python SDKs, reliable ingest, latest RAG techniques chunking/re-ranking
✅ RAG Leadership – Summary Index, Entity Extraction, Agentic Retrieval, MCP Server
✅ Managed Service – Free dev tier, pro for production, enterprise scale, no infrastructure
⚠️ vs No-Code – No native widgets/Slack/WhatsApp/builders/analytics/lead capture, requires custom UI

Platform type – TRUE RAG-AS-A-SERVICE with managed infrastructure
API-first – REST API, Python SDK, OpenAI compatibility, MCP Server
No-code option – 2-minute wizard deployment for non-developers
Hybrid positioning – Serves both dev teams (APIs) and business users (no-code)
Enterprise ready – SOC 2 Type II, GDPR, WCAG 2.0, flat-rate pricing
